The essence of event processing

So, what exactly is event processing? And why has it become such a pivotal component of business processes in our digital ecosystem?

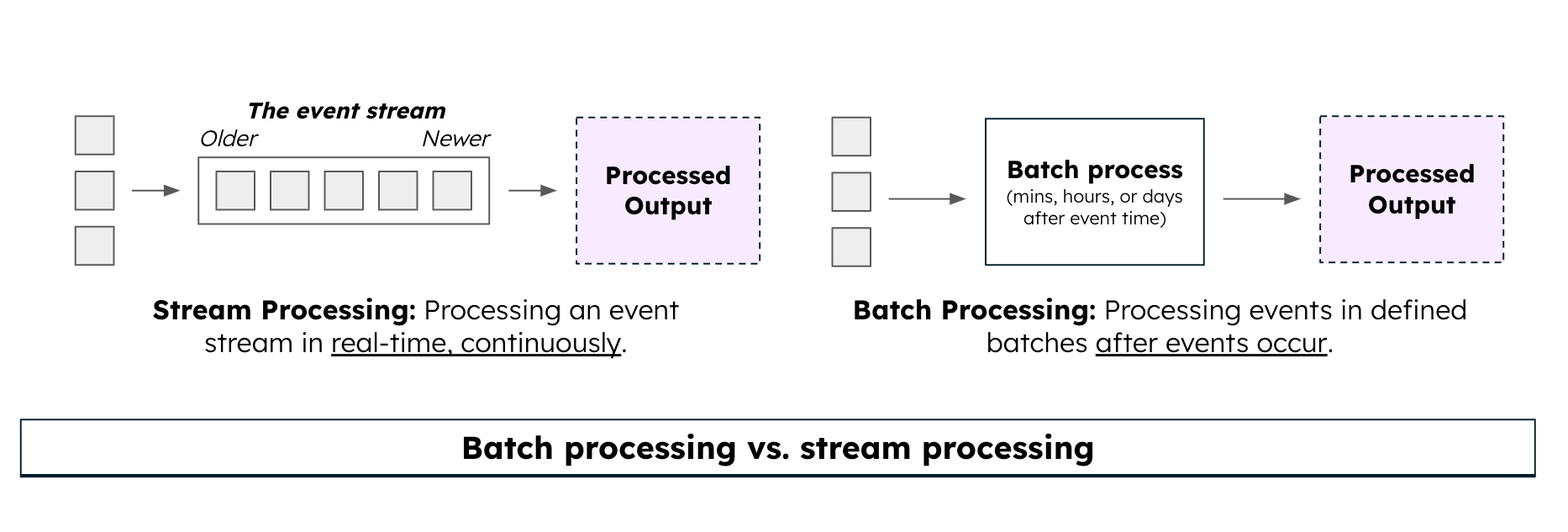

At its core, event processing is the continuous processing of event stream data. Event processing makes it possible to query, analyze, and react to time-sensitive events in real time. This powerful mechanism underpins the real-time interactions and instantaneous responses users have come to expect from applications and services, whether they are making an online purchase, monitoring health metrics, or simply checking a bank balance.

Event processing is not just about passively capturing or observing streams of data as events occur. It's about actively analyzing these streams to detect patterns and identify significant events, and then translating those events into action. Event processing can be as straightforward as reacting to a status change on a social media post or as intricate as monitoring fluctuations in global financial markets or identifying unusual patterns in vast datasets.
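As an illustration of the detect-and-react loop described above, here is a minimal, self-contained Python sketch. It handles events one at a time as they arrive, watches for a simple pattern (three or more failed logins by the same user within 60 seconds, a hypothetical rule chosen only for this example), and reacts by producing an alert. The `Event` type, its fields, and the thresholds are illustrative assumptions, not part of any product API.

```python
from collections import defaultdict, deque
from typing import Iterable, Iterator, NamedTuple


class Event(NamedTuple):
    """A hypothetical login event: who, what happened, and when (seconds)."""
    user: str
    kind: str  # e.g. "login_failed" or "login_ok"
    ts: float


def detect_bursts(
    events: Iterable[Event], threshold: int = 3, window_s: float = 60.0
) -> Iterator[str]:
    """Process events one at a time and yield an alert whenever a user
    accumulates `threshold` failed logins within `window_s` seconds."""
    recent = defaultdict(deque)  # per-user timestamps of recent failures
    for ev in events:  # each event is handled as it arrives, with no batching
        if ev.kind != "login_failed":
            continue
        q = recent[ev.user]
        q.append(ev.ts)
        # Drop failures that have fallen out of the time window.
        while q and ev.ts - q[0] > window_s:
            q.popleft()
        if len(q) >= threshold:
            yield f"ALERT: {ev.user} had {len(q)} failed logins in {window_s:.0f}s"
            q.clear()  # reset so one burst raises a single alert


stream = [
    Event("alice", "login_failed", 0),
    Event("bob", "login_ok", 5),
    Event("alice", "login_failed", 10),
    Event("alice", "login_failed", 20),
    Event("bob", "login_failed", 100),
]
for alert in detect_bursts(stream):
    print(alert)
```

Because `detect_bursts` is a generator, it never waits for the stream to end: an alert is produced as soon as the event that completes the pattern arrives, which is the property that separates event processing from batch processing.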

One might wonder: why is event processing so crucial? The answer lies in its ability to deliver real-time insights and enable immediate action.

In today's fast-paced digital realm, waiting for batch processing results or relying on manual intervention not only reduces efficiency but can also expose businesses to risks and missed opportunities. Event processing sidesteps these pitfalls. It keeps systems alert and primed to respond to any change from the first event notification, whether that change is a sudden spike in user engagement, an emerging security threat, or a customer transaction.

As the digital fabric of our world has become denser and more intricate, the volume of business events that demand monitoring and management has skyrocketed. Traditional data processing techniques, which often operate in silos and with inherent delays, are increasingly proving inadequate for this new reality. This is where event processing, armed with its real-time capability, shines. Event processing ensures businesses remain nimble, proactive, and consistently a step ahead in an ever-changing digital landscape.

Navigating event processing challenges

While event processing powers real-time data management and analysis, it's not without its challenges. For those responsible for ensuring its seamless operation, the journey can be demanding.

Developers often find themselves overwhelmed by the technical intricacies of event stream processing solutions. Navigating disparate languages, APIs, drivers, and tools to bring streaming data (from sources like Apache Kafka™) into their applications creates a fragmented development experience and operational complexity, with additional systems for teams to manage.

For developers and businesses alike, understanding and navigating these challenges is crucial to harnessing the full potential of event processing and building robust, efficient, and responsive systems.

Atlas Stream Processing: a game-changer

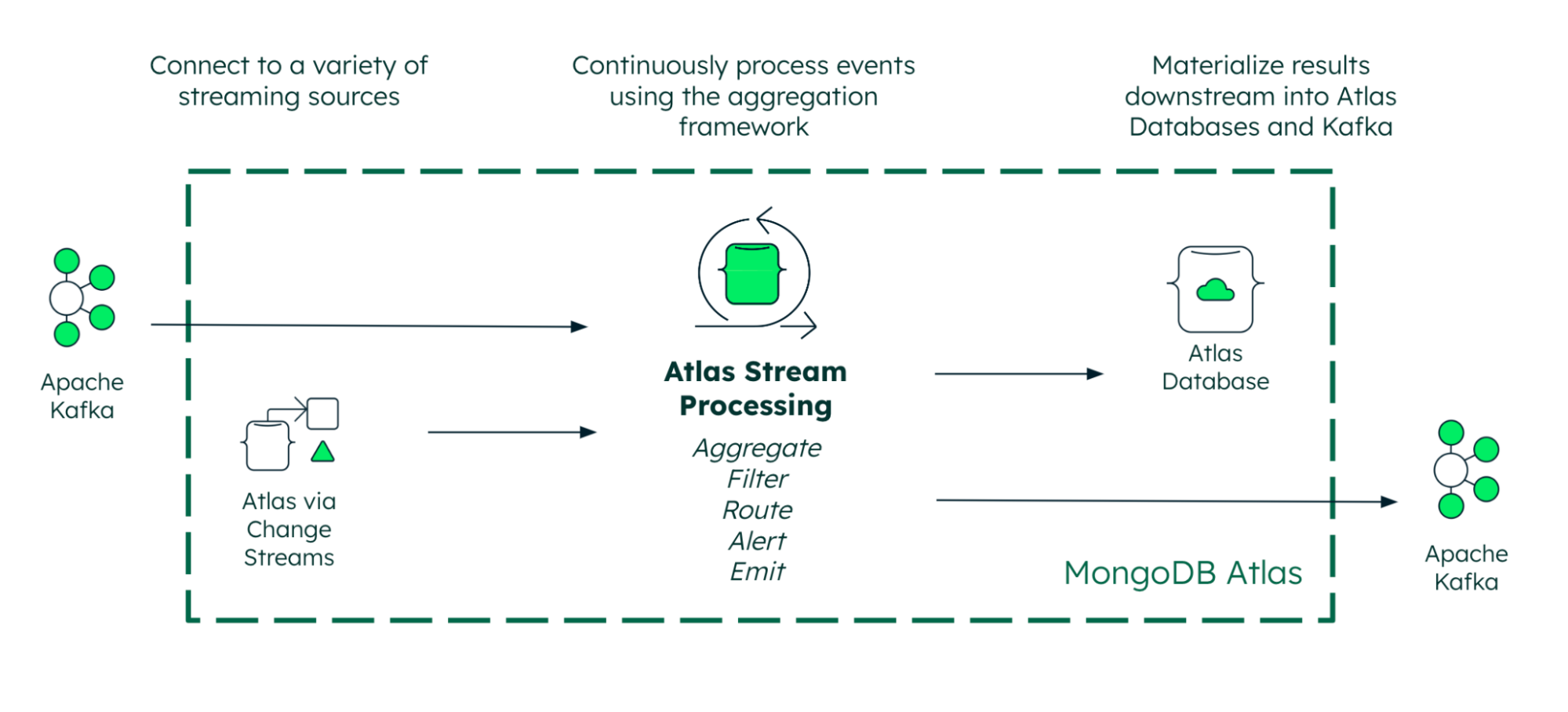

Atlas Stream Processing is the MongoDB-native way to process streaming data, transforming the way developers build modern applications.

Developers can now use the document model and the MongoDB aggregation framework to process rich and complex streams of data from platforms such as Apache Kafka. This unlocks powerful opportunities to continuously process streaming data (e.g., aggregate, filter, route, alert, and emit) and materialize results into Atlas or back into the streaming platform for consumption by other systems.
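The sketch below shows what such a pipeline might look like when written as Python data (lists and dicts, the same shape PyMongo uses for aggregation pipelines). The stage names `$source`, `$match`, `$project`, `$merge`, and `$emit` come from the MongoDB aggregation language as used by Atlas Stream Processing; the connection names, topics, database, collection, and field names are hypothetical placeholders, and the exact options each stage accepts should be checked against the Atlas Stream Processing documentation. The `check_shape` helper is a hypothetical illustration, and registering a pipeline with Atlas is not attempted here.

```python
from typing import Any

Stage = dict[str, Any]

# Hypothetical connection names: "kafkaProd" (a Kafka cluster) and
# "atlasCluster" (an Atlas cluster), as registered for a stream processor.
SOURCE: Stage = {"$source": {"connectionName": "kafkaProd", "topic": "orders"}}

# Filter: keep only paid orders of 500 or more (field names are illustrative).
FILTER: Stage = {"$match": {"status": "paid", "total": {"$gte": 500}}}

# Reshape: keep just the fields downstream consumers need.
PROJECT: Stage = {"$project": {"orderId": 1, "customer.id": 1, "total": 1}}

# Option A: materialize the results into an Atlas collection.
to_atlas: list[Stage] = [
    SOURCE,
    FILTER,
    PROJECT,
    {"$merge": {"into": {"connectionName": "atlasCluster",
                         "db": "sales", "coll": "largeOrders"}}},
]

# Option B: route the same results back into Kafka for other systems.
to_kafka: list[Stage] = [
    SOURCE,
    FILTER,
    PROJECT,
    {"$emit": {"connectionName": "kafkaProd", "topic": "large-orders"}},
]


def check_shape(pipeline: list[Stage]) -> None:
    """Hypothetical sanity check (not an Atlas API): a stream pipeline should
    start with $source and end with a sink stage, $merge or $emit."""
    if not pipeline or "$source" not in pipeline[0]:
        raise ValueError("pipeline must start with $source")
    if "$merge" not in pipeline[-1] and "$emit" not in pipeline[-1]:
        raise ValueError("pipeline must end with $merge or $emit")


for p in (to_atlas, to_kafka):
    check_shape(p)
    print([next(iter(stage)) for stage in p])
```

Because the pipeline is plain data, the filtering and reshaping stages can be shared while only the final stage changes, which is how the same logic can feed either Atlas or Kafka.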

Atlas Stream Processing makes stream processing a native capability of Atlas, unifying the developer experience of working with all the data required to power modern applications.

With Atlas Stream Processing, developers can perform:

- Continuous processing: Build aggregation pipelines to continuously query, analyze, and react to streaming data without the delays inherent to batch processing.

- Continuous validation: Check that events are properly formed before processing, detect message corruption, and detect late-arriving data that has missed a processing window.

- Continuous merge: Materialize views into Atlas or streaming systems like Apache Kafka.
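The continuous processing and continuous validation ideas can be illustrated with a small local simulation in Python. It validates each incoming event, routes malformed ones to a dead-letter list instead of stopping, groups valid readings into fixed, non-overlapping (tumbling) 10-second windows, and sets aside events that arrive after their window has already closed. In Atlas Stream Processing these concerns are expressed with aggregation stages such as `$validate` and `$tumblingWindow`; this sketch neither uses nor reproduces that API, and its event fields, schema, and lateness rule are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Any

Event = dict[str, Any]

# Hypothetical schema: field name -> accepted Python type(s).
REQUIRED = {"sensor": str, "temp": (int, float), "ts": (int, float)}


def is_well_formed(ev: Event) -> bool:
    """Validation: every required field is present with the expected type."""
    return all(isinstance(ev.get(f), t) for f, t in REQUIRED.items())


def process(events: list[Event], window_s: int = 10) -> tuple[dict, list, list]:
    """Validate events, average them per sensor in tumbling windows of
    `window_s` seconds, and flag events that arrive after their window closed.

    Returns (averages keyed by (sensor, window_start), dead letters, late events).
    """
    sums = defaultdict(lambda: [0.0, 0])  # (sensor, start) -> [total, count]
    dlq, late = [], []
    watermark = float("-inf")  # highest event time seen so far
    for ev in events:
        if not is_well_formed(ev):
            dlq.append(ev)  # malformed: route aside instead of failing
            continue
        start = int(ev["ts"] // window_s) * window_s
        # A window is closed once event time has moved past its end.
        if start + window_s <= watermark:
            late.append(ev)
            continue
        watermark = max(watermark, ev["ts"])
        acc = sums[(ev["sensor"], start)]
        acc[0] += ev["temp"]
        acc[1] += 1
    averages = {k: total / n for k, (total, n) in sums.items()}
    return averages, dlq, late


events = [
    {"sensor": "a", "temp": 20.0, "ts": 1},
    {"sensor": "a", "temp": 22.0, "ts": 4},
    {"sensor": "a", "temp": "hot", "ts": 5},  # malformed temperature
    {"sensor": "a", "temp": 30.0, "ts": 12},
    {"sensor": "a", "temp": 99.0, "ts": 3},   # arrives after window [0, 10) closed
]
averages, dlq, late = process(events)
print(averages)  # {('a', 0): 21.0, ('a', 10): 30.0}
print(dlq)
print(late)
```

Assigning windows by event time (the `ts` field) rather than by arrival order is what makes late data detectable: the event with `ts` 3 belongs to the window starting at 0, but by the time it arrives the stream has already moved past that window's end.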

Ready to dive in? Explore Atlas Stream Processing now.

How Atlas Stream Processing works

Let's dive deep into the mechanics and features of Atlas Stream Processing.

Seamless integration with key data sources

At the heart of Atlas Stream Processing is its ability to integrate with key sources of data. Atlas Stream Processing can consume data from Apache Kafka, or from any MongoDB Atlas collection through change streams, so data flows into processing continuously rather than in periodic batches. The result is a dynamic, event-driven data model that is both robust and reliable.
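To show the two kinds of sources side by side, the Python sketch below writes a `$source` stage for a Kafka topic and one for an Atlas collection, then handles change events in the shape documented for MongoDB change streams (`operationType`, `documentKey`, `fullDocument`). The connection, topic, database, and collection names are hypothetical, the `change_to_document` helper is illustrative rather than part of any API, and the exact `$source` options should be confirmed in the Atlas Stream Processing documentation. Outside Atlas Stream Processing, drivers expose the same change events, for example through PyMongo's `Collection.watch()`.

```python
from typing import Any, Optional

Doc = dict[str, Any]

# Hypothetical names. A Kafka source reads a topic...
kafka_source: Doc = {"$source": {"connectionName": "kafkaProd", "topic": "clicks"}}
# ...while an Atlas source reads change events from a collection.
atlas_source: Doc = {
    "$source": {"connectionName": "atlasCluster", "db": "shop", "coll": "orders"}
}


def change_to_document(change: Doc) -> Optional[Doc]:
    """Return the full document carried by a change event, if any.

    Inserts and replaces always include `fullDocument`; updates include it
    only when full-document lookup is enabled; deletes never include it.
    """
    if change.get("operationType") in ("insert", "replace", "update"):
        return change.get("fullDocument")
    return None


changes = [
    {"operationType": "insert", "documentKey": {"_id": 1},
     "fullDocument": {"_id": 1, "item": "lamp", "qty": 2}},
    {"operationType": "update", "documentKey": {"_id": 1},
     "updateDescription": {"updatedFields": {"qty": 3}}},
    {"operationType": "delete", "documentKey": {"_id": 1}},
]
for c in changes:
    print(c["operationType"], change_to_document(c))
```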

Real-time data processing

In today's digital age, real-time responsiveness is not a luxury; it's a necessity. Atlas Stream Processing is engineered to process data in real time: as data flows through Apache Kafka or MongoDB Atlas, it can be processed efficiently using the MongoDB Query API, so applications and end users benefit from immediate insights and actions. This real-time processing capability translates into better user experiences, faster decision-making, and a competitive edge in the market.

The benefits of Atlas Stream Processing

In today's dynamic digital environment, businesses are constantly seeking tools and platforms that can elevate their operations and enable innovation. Atlas Stream Processing offers a few key advantages for the developer experience:

- Built on the document model: This allows for flexibility when dealing with the nested and complex data structures common in event streams. It reduces the need for pre-processing steps and lets developers work naturally with data that has complex structures.

- Unified experience of working across all data: By offering a single platform — across API, query language, and tools — developers can process rich and complex streaming data alongside the critical application data in the database.

- Fully managed in MongoDB Atlas: Atlas Stream Processing builds on an already robust set of integrated services. With just a few API calls and lines of code, you can stand up a stream processor, database, and API serving layer across any of the major cloud providers.
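The document-model point can be made concrete with a nested event. The Python sketch below resolves MongoDB-style dotted paths (such as `device.location.site`) directly against the nested structure, so nothing has to be flattened first. The event fields are hypothetical, and `get_path` is a simplified illustration: unlike real MongoDB dot notation, it does not fan out across every element of an array and only follows explicit numeric indexes.

```python
from typing import Any

# A hypothetical nested event, as it might arrive from a stream.
event = {
    "eventId": "e-1001",
    "device": {"id": "th-7", "location": {"site": "plant-2", "zone": "A"}},
    "readings": [
        {"metric": "temp", "value": 71.5},
        {"metric": "rpm", "value": 1200},
    ],
}


def get_path(doc: Any, path: str, default: Any = None) -> Any:
    """Follow a dotted path through nested dicts; numeric segments index lists."""
    cur = doc
    for part in path.split("."):
        if isinstance(cur, dict) and part in cur:
            cur = cur[part]
        elif isinstance(cur, list) and part.isdigit() and int(part) < len(cur):
            cur = cur[int(part)]
        else:
            return default  # path does not exist in this event
    return cur


# The same path strings would appear in a stage such as
# {"$match": {"device.location.site": "plant-2"}}.
print(get_path(event, "device.location.site"))  # plant-2
print(get_path(event, "readings.1.value"))      # 1200
print(get_path(event, "device.battery"))        # None
```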
