The 5-Step Guide to Mainframe Modernization for Banks

April 21, 2022 | Updated: June 6, 2022

Enriched, convenient, and personalized are the watchwords for any business building a modern, digital customer experience. It’s no different for traditional retail banks, especially as they try to fend off challenger banks and design their own online banking and in-branch experiences to win new business and retain existing customers.

But in order to beat the competition and build experiences that best those offered by neobanks, established retail banks need to master their data estate. Specifically, they need to free themselves from the rigid data architectures associated with legacy mainframes and monolithic enterprise banking applications.

Only then can established banks have their developers get to work building high-quality customer-facing applications rather than managing thousands of SQL tables, scrambling to rework schemas, or maintaining creaky legacy systems.

The first step on this journey is modernizing the mainframe.

Enriched modernization in 5 phases

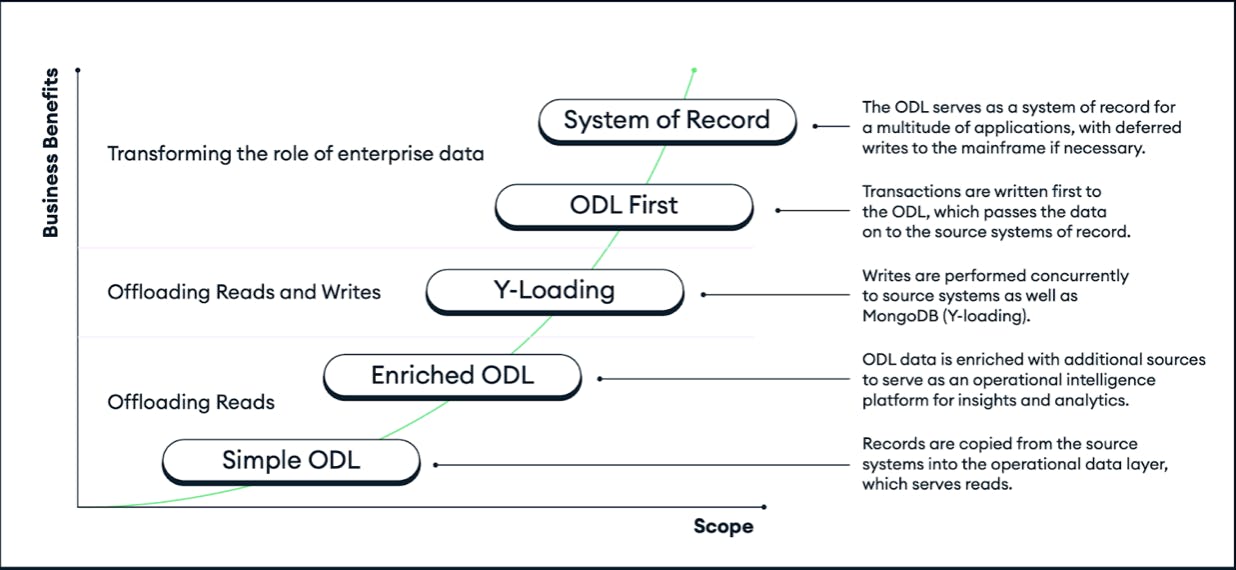

The best way to modernize is through a phased model that uses an operational data layer (ODL).

An ODL acts as a bridge between a bank’s existing systems and its new ones. Using an ODL enables an iterative approach: banks see progress toward modernization at each step along the way while still protecting existing assets and business-critical operations.

Banks can see rapid improvements in a relatively short amount of time while preserving the legacy components for as long as they’re needed to keep the business running. MongoDB’s five-phase approach to modernization enables banks to modernize iteratively while balancing performance and risk.

If banks are eager to modernize and their customers are demanding modern banking experiences, what’s taking banks so long to move away from the legacy systems that are restricting their ability to innovate? And why do so many legacy modernization efforts fall short? Download The 5 Phases of Banking Modernization to start plotting your path forward.

Mainframe modernization techniques

With an ODL, the legacy infrastructure can be switched off piece by piece and retired as more functionality is added. In this scenario, database operations become much more efficient because objects get stored together rather than in disjointed locations. Reads are executed in parallel via the nodes in a replica set. Writes are largely unaffected.
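To make the "stored together" point concrete, here is a minimal sketch in plain Python (no database driver) that folds rows from three relational tables into one document per customer. The table and field names (`customers`, `accounts`, `transactions`, `customer_id`, and so on) are assumptions for illustration, not a schema from any real banking system.

```python
from collections import defaultdict

# Hypothetical rows as they might be extracted from separate legacy tables.
customers = [{"customer_id": 1, "name": "Ada Example"}]
accounts = [
    {"account_id": "A-10", "customer_id": 1, "type": "checking"},
    {"account_id": "A-11", "customer_id": 1, "type": "savings"},
]
transactions = [
    {"account_id": "A-10", "amount": -25.0, "memo": "groceries"},
    {"account_id": "A-10", "amount": 1200.0, "memo": "salary"},
    {"account_id": "A-11", "amount": 300.0, "memo": "transfer"},
]


def build_customer_documents(customers, accounts, transactions):
    """Embed each customer's accounts and transactions in one document."""
    # Group transactions under the account they belong to.
    tx_by_account = defaultdict(list)
    for tx in transactions:
        tx_by_account[tx["account_id"]].append(
            {"amount": tx["amount"], "memo": tx["memo"]}
        )

    # Group accounts (with their transactions) under the owning customer.
    accounts_by_customer = defaultdict(list)
    for acct in accounts:
        accounts_by_customer[acct["customer_id"]].append(
            {
                "account_id": acct["account_id"],
                "type": acct["type"],
                "transactions": tx_by_account[acct["account_id"]],
            }
        )

    return [
        {
            "_id": c["customer_id"],
            "name": c["name"],
            "accounts": accounts_by_customer[c["customer_id"]],
        }
        for c in customers
    ]


docs = build_customer_documents(customers, accounts, transactions)
```

A single lookup by `_id` then returns everything a customer-facing screen needs, where the relational layout would require joins across three tables.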

To bring similar benefits to writes, banks may choose to implement an ODL with sharding and regional shards, bringing writes closer to the actual user. Workloads can then be gradually moved from legacy systems to the ODL, with the ultimate goal to decommission the legacy system.
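The idea behind regional shards can be pictured as a routing decision: each write goes to the shard that serves the user's region. In MongoDB this is configured on the cluster rather than written in application code, so the sketch below only models the decision itself. The region codes, shard names, and the `region` field are hypothetical.

```python
# Hypothetical mapping of customer regions to regional shards.
REGION_TO_SHARD = {
    "EU": "shard-frankfurt",
    "US": "shard-virginia",
    "APAC": "shard-singapore",
}
DEFAULT_SHARD = "shard-virginia"


def shard_for(document):
    """Pick the shard whose region matches the document's region field."""
    return REGION_TO_SHARD.get(document.get("region"), DEFAULT_SHARD)


def route_writes(documents):
    """Group documents by target shard, as a router would before writing."""
    batches = {}
    for doc in documents:
        batches.setdefault(shard_for(doc), []).append(doc)
    return batches


writes = [
    {"customer_id": 1, "region": "EU", "amount": 40.0},
    {"customer_id": 2, "region": "APAC", "amount": 15.5},
    {"customer_id": 3, "region": "EU", "amount": 99.0},
    {"customer_id": 4, "amount": 5.0},  # no region: falls back to the default
]
batches = route_writes(writes)
```

Because the routing key is part of every document, a write from a European customer never has to travel to a shard on another continent.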

The beauty of this approach to modernization is that it starts with the use case: What problems does the bank face in its data management and what functionalities are customers requesting?

If the first priority is giving customers access to historical transaction data, then banks can tackle that problem immediately by building a repository (or domain) to offload customer data from the mainframe. If the priority is cost reduction, then an ODL can act as an interim layer, giving applications the data they need without running expensive queries against mainframe data.

The advantages of an ODL

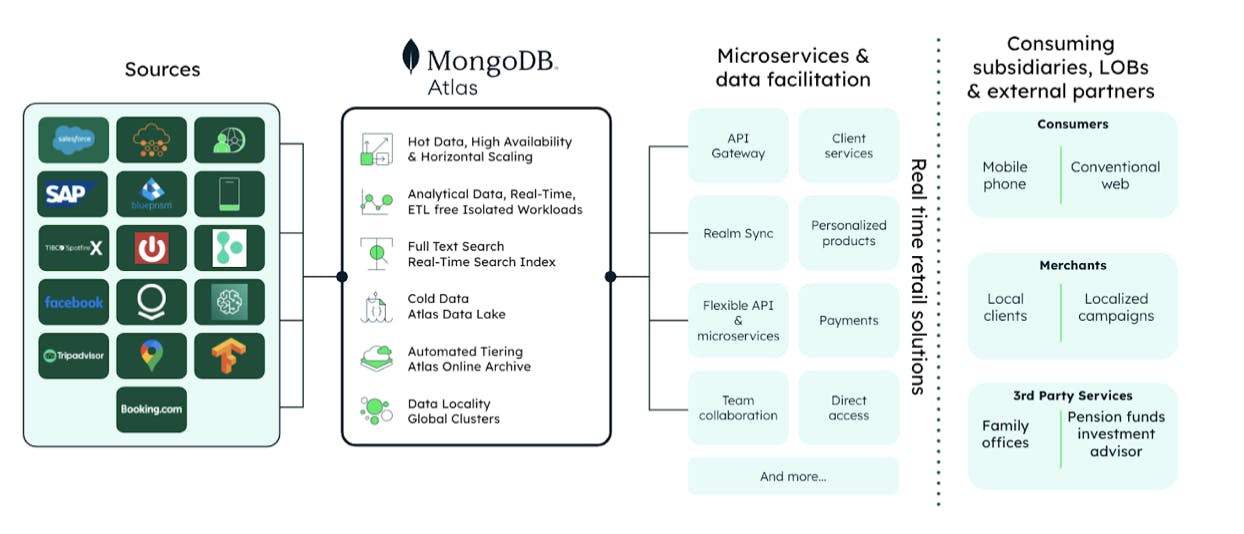

MongoDB is ideal for connecting legacy mainframes and databases to newer architectures, such as a data mesh, by way of an ODL. An ODL has a number of advantages. Combined, these advantages make data massively easier to access and use — and applications easier and faster to build.

- An ODL allows an organization to process and augment data that resides in separate silos, and then use that data to power a downstream product, such as a website or an ATM. With an ODL, data is physically copied to a new location. A bank’s legacy systems remain in place, but new applications can access data through the ODL rather than interacting directly with legacy systems.

- An ODL can draw data from one or many source systems and power one or many consuming applications, unifying data from multiple systems into a single real-time platform.

- An ODL relieves the mainframe of workloads. One useful by-product is that consumers avoid the service interruptions caused by maintenance windows on legacy systems, like Oracle Exadata.

- An ODL can be used to serve only reads, accept writes that are then written back to source systems, or evolve into a system of record that eventually replaces legacy systems and simplifies the enterprise architecture. Because of its ability to work with legacy systems, or to gradually replace them, and its ability to support an evolutionary approach to legacy modernization, many banks find that an ODL is a critical step on the path to full modernization of their enterprise architecture.
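The difference between a read-only ODL and one that writes back to its source can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not MongoDB's implementation: `LegacySystem`, `OperationalDataLayer`, and the `write_back` flag are hypothetical names introduced for illustration.

```python
class LegacySystem:
    """Stand-in for a mainframe-backed system of record (hypothetical)."""

    def __init__(self, records):
        self.records = dict(records)

    def write(self, key, value):
        self.records[key] = value


class OperationalDataLayer:
    """Toy ODL: serves reads from its own copy; optionally writes back."""

    def __init__(self, source, write_back=False):
        self.source = source
        self.write_back = write_back
        self.store = dict(source.records)  # data is physically copied

    def read(self, key):
        return self.store.get(key)  # never touches the legacy system

    def write(self, key, value):
        if not self.write_back:
            raise PermissionError("this ODL is configured read-only")
        self.store[key] = value
        self.source.write(key, value)  # propagate to the system of record


legacy = LegacySystem({"acct-1": {"balance": 100}})
read_only = OperationalDataLayer(legacy)
read_write = OperationalDataLayer(legacy, write_back=True)
read_write.write("acct-1", {"balance": 80})
```

In this model, the third mode (the ODL as system of record) would amount to dropping the `self.source.write(...)` call once the legacy system is retired.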

In terms of architectural setup, some banks may want one ODL for each of their data domains but others may find certain domains can share an ODL. The ODS/ODL template can be applied in a variety of ways — without breaking the bank’s internal standards.

For example, imagine an ATM terminal connected to a MongoDB-based ODL. With the ODL in place, data from the mainframe is replicated in real time and made available for the consumer to check their most recent transactions and account balance on the ATM. Customer balance information, however, also still resides on the source system.

Using the ODL to replicate and display information from the mainframe avoids customers having to face annoying delays while they wait for the information from a mainframe to load. At the same time, risk management and regulatory reports can still be run against a mainframe as a batch “end of day” process.
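The ATM scenario can be sketched as a stream of change events folded into a per-account view that the terminal reads. The article does not say how the replication is implemented, so the event feed here is an assumption, and `ChangeEvent`, `AccountView`, `apply_event`, and `atm_screen` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeEvent:
    """One posted transaction as emitted by a hypothetical mainframe feed."""
    account_id: str
    amount: float
    memo: str


@dataclass
class AccountView:
    """What the ODL keeps per account for the ATM screen."""
    balance: float = 0.0
    recent: list = field(default_factory=list)


def apply_event(odl, event, keep=5):
    """Fold one change event into the ODL's per-account view (keep >= 1)."""
    view = odl.setdefault(event.account_id, AccountView())
    view.balance += event.amount
    view.recent.append((event.amount, event.memo))
    del view.recent[:-keep]  # retain only the most recent `keep` entries


def atm_screen(odl, account_id):
    """Read path used by the ATM: served entirely from the ODL copy."""
    view = odl[account_id]
    return {"balance": view.balance, "recent": list(view.recent)}


odl = {}
feed = [
    ChangeEvent("acct-1", 500.0, "deposit"),
    ChangeEvent("acct-1", -60.0, "cash withdrawal"),
    ChangeEvent("acct-1", -12.5, "card payment"),
]
for event in feed:
    apply_event(odl, event, keep=2)

screen = atm_screen(odl, "acct-1")
```

The mainframe still holds the authoritative balance; the ATM simply never has to wait on it for a routine lookup.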

With an ODL in place, data can flow from the mainframe to a newer architecture, giving the ATM broader capabilities that expand customers’ banking experiences, such as the ability to pay invoices, change addresses, or even open additional accounts.

- Nightly batch, bulk load, or real-time updates: MongoDB is flexible enough to connect to any data source, be it classic Db2 for z/OS, Oracle, SQL Server, Hadoop-based legacy, or even Excel spreadsheets. MongoDB has the appropriate connectivity to ingest any data at any time from anywhere.

- Enrichment, data domains, and data marketplaces: With its document data model, MongoDB can bring data into data domains without relying on convoluted table schemas and ETL processes. The domains emerge naturally based on the application and user community requirements.

- Security, schemas, and validation: MongoDB has multiple layers of security, from password protection to encryption in flight and at rest, plus granular field-level encryption, all with external key management.

Take the next step in mainframe modernization

Because many core banking capabilities are transactional and can be handled with daily batch processing, mainframes remain the backbone of our financial system. Mainframe modernization might sound daunting, but it doesn’t have to be. Banks can choose to proceed along a straightforward and predictable path that allows them to modernize iteratively. They can receive the benefits of modernization in one area of the organization even if other groups are earlier in their modernization path.

It’s possible to do this while supporting increasingly complex data privacy regulations and, importantly, minimizing risk.

Banks and other financial institutions that have successfully modernized have seen cost reductions, faster performance, simpler compliance practices, and rapid development cycles. New, flexible architectures have accelerated the creation of value-added services for consumers and corporate clients.

If you’re ready to learn more about how you can accelerate your digital transformation and minimize risk, download “The 5 Phases of Banking Modernization” now.