Breaking the WiredTiger Logjam: The Wait-Free Solution (2/2)

Part one of this pair explored the original algorithm the WiredTiger write-ahead log used to consolidate writes in order to minimize IO. It used atomic compare-and-swap operations in two phases to accomplish this without time-consuming locking. This algorithm worked extremely well as long as there were no more than a few threads running per core. But its reliance on busy-waiting to avoid locking caused a logjam when the number of threads increased beyond that limit -- a serious problem given that many MongoDB workloads would have a large number of threads per core. This issue was blocking MongoDB’s goal of making WiredTiger the default storage engine in v3.2.

This story has a happy ending thanks to my colleague, Senior Technical Service Engineer Bruce Lucas. Bruce had initially uncovered the logjam and reported it to me; together, we overcame it without compromising any other workloads. Because Bruce’s mindset was not colored by the legacy of the original approach, he was able to provide the critical insight that paved the way for the solution, allowing WiredTiger to become the default storage engine in v3.2.

Why did threads have to wait?

It was the summer of 2015, and I was trying to eliminate the logjam Bruce had uncovered. I was working on improving performance by reducing thread contention, but I was making only incremental progress. Bruce, on the other hand, went in a very different direction. He questioned the very need for threads to wait before copying their payloads, and he set about writing a prototype to prove they didn’t have to.

We can't prohibit MongoDB from using a lot of threads -- it's designed to do that. Nor can we mandate that it only run on massively multi-core machines. Using mutexes was an absolute non-starter. Bruce knew those were dead-ends, so he scrutinized the conceptual building blocks of the algorithm:

-

Claiming a place in a

slot(which requires atomicity) can be decoupled from copying to it (which can be done in parallel). -

Claiming a place in a

slotatomically, which we call joining, can be done via compare-and-swap operations on an index variable. -

That index is identical to the count of total bytes claimed, so we call it the

joincounter. -

A slot can’t be written to the OS until all the threads that have joined it have actually performed their copies, so slots have to track when threads complete their copies.

-

Tracking bytes written can be done with atomic operations on a release counter. When its release == join, a slot is ready to be written to the OS.

-

We must also track a slot’s state, so threads know when it is unavailable, joinable, ready to write to the OS, etc.

Individually, none of these items require threads to wait; the need arises from their interaction. In order to safely write a slot to the OS, for example, a thread has to determine that the slot’s state allows it, and that any thread that has claimed a spot in its buffer (join) has completed copying its data (release) -- and this three component check must be done atomically.

The problem is that CPUs do not allow atomic operations involving more than two registers. (Footnote: Theoretically they could, but in practice none do.) If join, release, and state were tracked in separate variables, we could compare state with a READY_TO_WRITE value, or join with release, but not both at once.

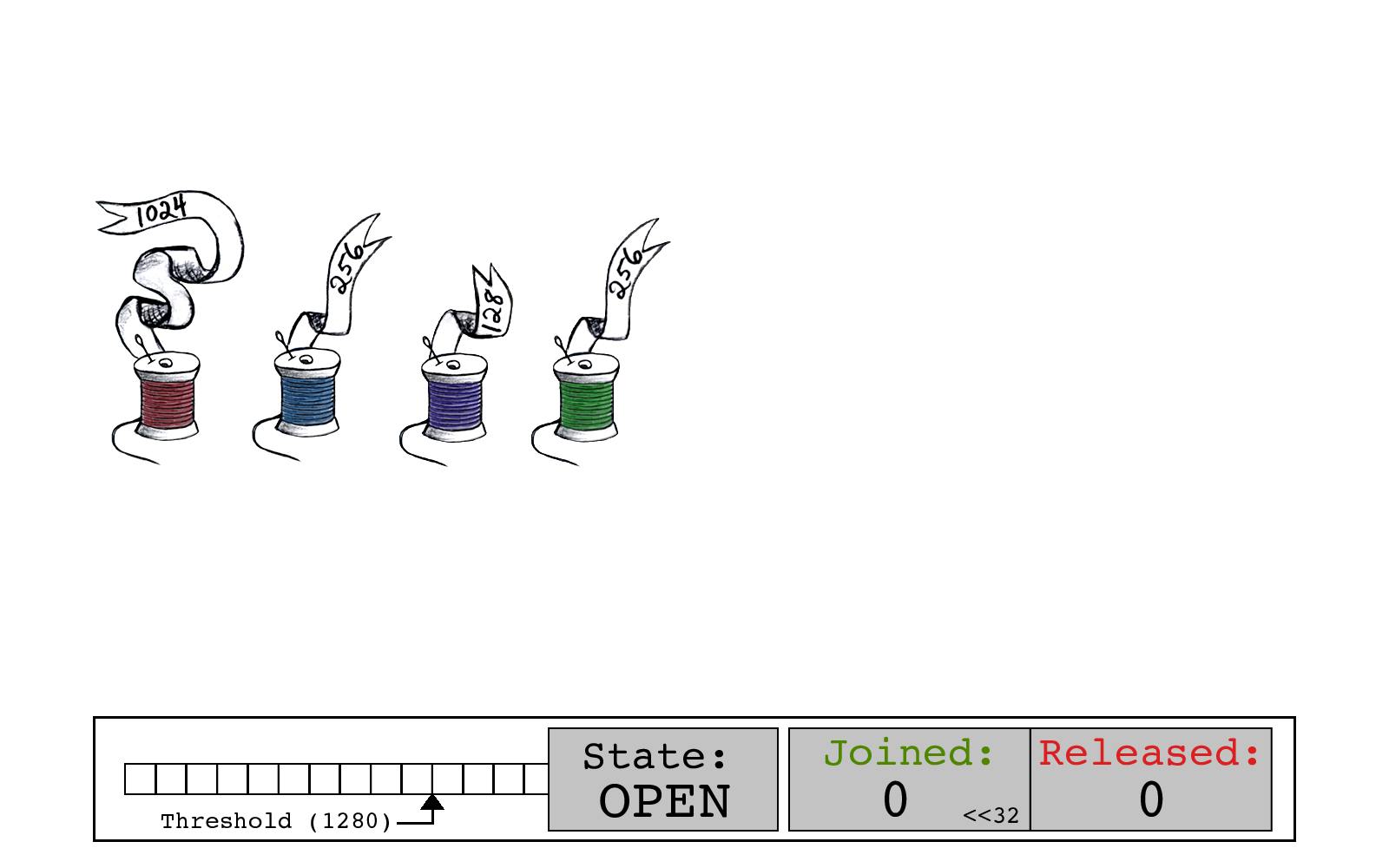

Thus, to implement the atomic operations, a single register (variable) must be used to multiplex a slot’s state along with the bookkeeping about joined and released bytes. This is precisely the slot_state field described in part one.

It is tempting to allow threads to increment slot_state as they join and decrement it as they release, but item #2 on our list forbids it: slot_state must always point to the next free byte in the buffer. Allowing a thread to decrement slot_state before joins are complete would point slot_state at memory that was already claimed by other threads. Keeping an independent pointer into the buffer that only increments would solve that issue, but it would defeat atomicity.

In summary: the need for atomicity constrains us to using a single variable, and the need to track where threads can write means we cannot mingle increments and decrements. Therefore we must have two phases. In the join phase, threads claim space, but must then wait for the release phase to begin; in the release phase, threads write to the space they claimed and mark their bytes written.

An epiphany

If only we could maintain two separate counters for join and release, we could eliminate the need for threads to wait. We could let them write into the slot as soon as they received their write offset from their join operation.

But Bruce noticed something critical: these counters fit easily within 32 bits, so they could both fit inside an int64. We could logically split a single register into the pieces necessary to maintain all of the required information: the slot state and the two counters.

With this scheme, we can implement joins and releases with masking and bit-shifting, which Bruce cleaned up using a few macros:

// put together and pull apart two 32-bit counters from 64-bit slot state

#define JOINED_RELEASED(joined, released) (((joined)<<32) + (released))

#define JOINED(state) ((state)>>32)

#define RELEASED(state) ((int64_t)(int32_t)(state))

// a simple join:

old_state = slot->state;

offset = JOINED(old_state);

old_release = RELEASED(old_state);

new_state = JOINED_RELEASED(offset + my_size, old_release)

// Now atomically set old_state, new_state

Bruce wrote a program demonstrating this method, not using any WiredTiger or MongoDB code, to test his idea. He simulated the multithreaded load and ran the numbers, and the results encouraged him to whip together a patch for WiredTiger. In proof-of-concept mode, he ignored all the details: rolling over journal files, records that are too large to store in a buffer, errors and timeouts that interrupt the flow of data, and more. Bruce skipped all that, but his patch was enough to prove that even in the context of a server doing lots of other processing, his optimization to the write-ahead log was substantial.

Without the need to wait for the join/release phase change, threads can claim a spot, write their payload, record their bytes written, and leave, without ever waiting. This implementation does away with the need for a "leader" thread and divvies up the responsibilities between two threads. When a thread performs a join that fills the buffer, it closes the slot and prepares a new one. When a thread’s release completes with no other pending writes and a full buffer, it writes the buffer to the OS.

Going from POC to production code

When Bruce sent me his first patch, I was hopeful but a little bit daunted! His solution attacked the problem from an angle that I had never even considered, but there were so many details that were not accounted for; it would be a lot of work to reconcile with the existing write-ahead logging code. But the performance improvements were so significant, it was clearly worth trying to make it work. I set about filling in the gaps. Bit by bit, over the next couple of weeks, I addressed the complexities. As I made my way down the list, my cautious optimism became out-and-out enthusiasm, until finally I had a fully realized write-ahead log implementation using the new method.

Together, the code for joining, copying and releasing now looks something like this:

/* Join my record size into the existing slot */

old_state = slot->state;

new_state = old_state + join_state(my_size, &my_offset);

/* Retry if we race on the atomic operation */

if (!atomic_cas(slot->state, old_state, new_state))

go retry reading old_state;

/* Prepare a new buffer if this one is full */

if (my record fills buffer)

close and switch slot

/* Copy my record */

memcpy(buffer + my_offset, my_record, my_size);

/* Release my size after copy */

old_state = slot->state;

new_state = old_state + release_state(my_size);

if (!atomic_cas(slot->state, old_state, new_state))

go retry reading old_state;

/* If buffer is full and I’m the last to finish, write */

if (buffer is full and my release is the last one)

write_buffer_to_OS();

An important detail: idle systems

Because filling the slot’s buffer is the trigger to write the records to the OS, the algorithm works well with a steady flow of incoming records. But if a system goes idle, any records in a current unfilled buffer will sit unflushed until either enough writes come in to fill the buffer, or a write using j:true forces a sync. While technically the records in that buffer were written explicitly without durability guarantees, records should not remain unflushed while a system is idle! To address this, we added a 50-millisecond idle timeout that pushes the buffer to the OS, limiting how long a record is exposed to the risk of a process crash and MongoDB syncs to disk every 100-milliseconds to limit the risk of system crash.

It's much much faster

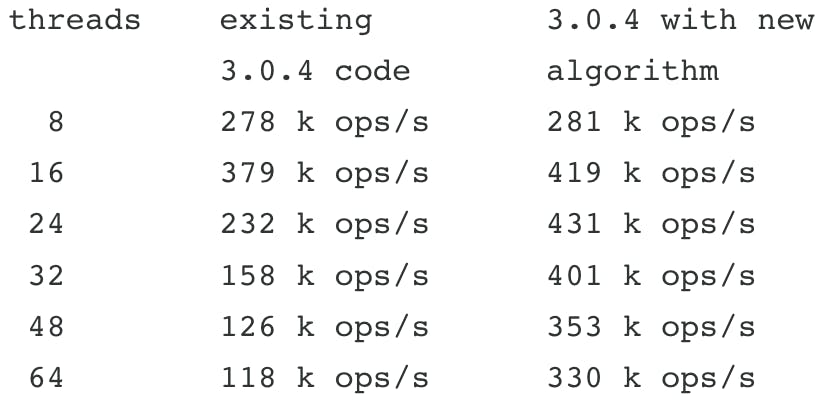

Measurements of the problematic workload against production code were very exciting:

We had nearly tripled performance of the journal algorithm, and had almost entirely eliminated the negative scaling at high thread counts without harming performance at low thread counts. The WiredTiger team has a standard suite of benchmarks, and some of those benefited more than others, but none were penalized by the changes.

Final thoughts

The impact of code changes at the storage layer are often undetected, as they are eclipsed by the overhead of the many layers above. The opportunity to have conspicuous, user visible improvements like this are rare, and offer a particularly novel variety of job satisfaction.

Finally, optimizing code for a particular set of conditions does more than specialize the code -- it also specializes your thoughts. As your thoughts bore deeper and deeper into the problem space, they leave tracks, which become trails, and eventually paths, which your thoughts then naturally continue to follow. So when you encounter a need to make code suit a completely new environment, it helps to have a friend who isn't influenced by your preconceptions.