SQL Migrations Made Easy with PeerIslands SQL Migration Factory

More and more customers are migrating their SQL workloads to MongoDB due to multiple advantages offered by MongoDB. The advantages of MongoDB Atlas include but are not limited to :

-

Increased development velocity

-

Intuitive data representation using the document format

-

Horizontal scalability

-

High performance

-

Elimination of point solutions and simplification of data landscape

PeerIslands SQL Migration factory brings together deep hands on keyboard expertise in migrating SQL workloads and a complete suite of tools for handling each stage of the SQL migration journey. PeerIslands SQL migration factory aims at providing a seamless experience to customers looking to migrate out of SQL DBs into MongoDB.

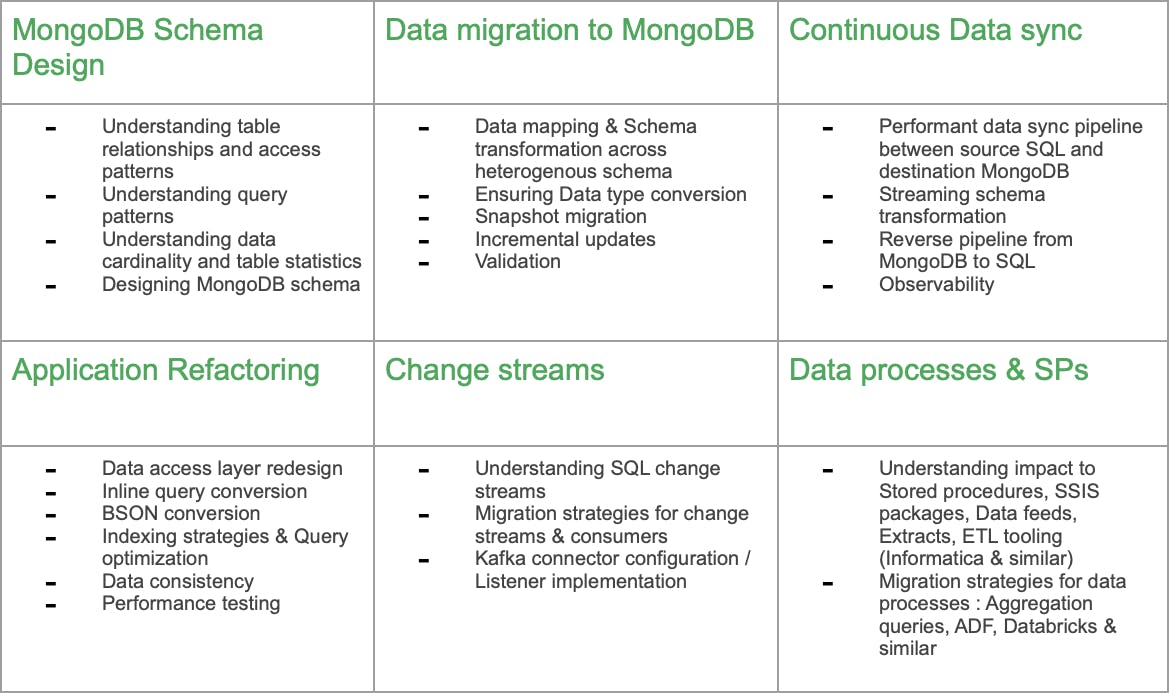

The dimensions of a typical SQL migration

SQL migrations require a holistic approach across multiple facets. The typical areas which we need to address as part of migrations are below:

PeerIslands has helped multiple customers across each of these dimensions. Based on our experience with customers, PeerIslands has created a suite of tools to help address common problems faced by customers.

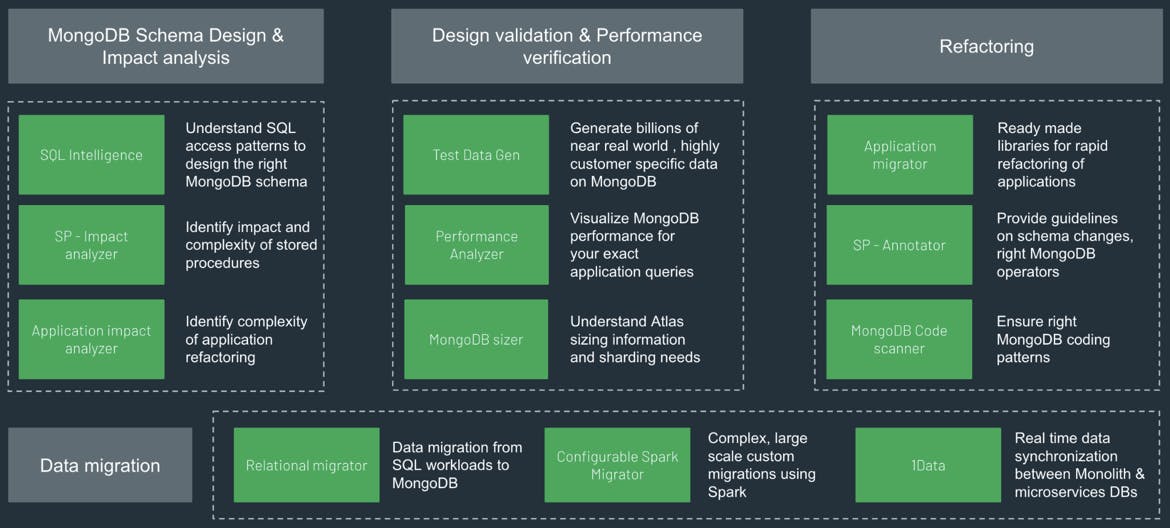

PeerIslands SQL migration tool suite

PeerIslands has developed a portfolio of tools that can help you with each stage of the SQL migration journey.

MongoDB schema design

We have seen customers struggle with developing the right MongoDB schema when the number of source tables exceeds even 10s of tables. Understanding the lay of the land and the access patterns takes significant time commitment from the SMEs and MongoDB team. The below tools help get a clear understanding of the SQL footprint and get guidance on developing the right MongoDB schema very quickly.

SQL intelligence

This tool provides rich consumable insights of the source SQL workload. The insights help in designing target MongoDB schema accurately. The tool analyzes 2 broad areas:

SQL data profile

The tool queries against the source SQL profile to derive:

-

List of tables, no of columns and row count

-

Table relationships (1 to 1, 1 to N, M to N)

-

Read heavy tables, Write heavy tables

-

Cardinality of relationships

The tool does this by querying the Data dictionary tables / Information schema and indexes.

Data access profile

The tool analyzes the SQL query logs to provide information about

-

Frequently joined tables

-

Frequently queried fields

-

Types of joins

The tool does this by running a parser on SQL query logs

Based on the insights gathered from these tools, we can rapidly design the target MongoDB schema.

Application impact analysis

One of the core impediments to a successful migration is not having a good sense of the effort and complexity of migrating to MongoDB. We have seen customers struggle on how to handle stored procedures that they have developed over the years. Customers also find it helpful to get a sense of how much code refactoring is involved when they move to MongoDB. PeerIslands has tools that address both these issues:

Stored procedures analyzer

This tool provides rich consumable insights about the stored procedures. The insights help in designing the right approaches for migrating the stored procedures.

The tool analyzes the SQL stored procedures to provide information about:

-

Frequently joined tables

-

Frequently queried fields

-

Types of joins

-

Operators used : Logical, relational and others

-

Subqueries

The tool does this by running a parser on the stored procedures. The tool also annotates each of the stored procedures with corresponding MongoDB operators.

Application analyzer

Applications that have a clear DAL layer are easier to migrate to other databases. However actual production applications can have multiple deviations from a standard architecture pattern.

We look at the application code to identify:

-

Entity models

-

Inline SQL queries

-

Data type conversions required

-

Date formats

-

Number of APIs

-

Access patterns

We leverage existing cyclometric analysis tools that customer may already have to understand the complexity.

These tools helps is getting a clear understanding of the amount of effort required to migrate application code.

Design validation and performance analyzer

One of the common asks from customers is to verify MongoDB performance for their particular use case. Customers want to simulate specific nuances around workloads, schema and queries. This is required to build confidence on MongoDB internally within the organization and to clearly understand & articulate cost performance benefits of MongoDB to other stakeholders. The below set of tools help address this critical need.

Test data generator and performance analyzer

Test data generator is a solution accelerator that helps customers generate large volume, customizable, close to real world test data for specific customer schemas.

The tooling also helps performance test MongoDB with custom load profiles and run specific queries against the data to understand MongoDB performance. This helps us understand the performance of specific application queries and fine tune MongoDB schema / indexes appropriately.

Key highlights of the solution are given below:

-

Test data generator & Performance analyzer is one integrated tool - test data generation, load profiling and testing for performance are all available as part of the tooling. There is no need for multiple tooling.

-

The solution generates close to real word data

-

Test data is customizable to specific customer schema

-

With load profiling, you can test MongoDB for specific load testing scenarios

-

MongoDB queries that are used in the application can be tested for performance under specific load conditions

MongoDB Sizer

MongoDB Sizer helps you define your own MongoDB schema and understand the size implications of that schema.

MongoDB Sizer is a fully UI driven tool. Using the tool, we can:

-

Define our schema or import a MongoDB schema

-

Provide sample documents and look at document sizes

-

Define indexes and collections

-

Provide number of documents expected

-

Calculate raw data size

The sizer also takes into account various parameters that drive Atlas sizing like IOPS, RAM and storage to calculate Atlas instance sizing. We will be able to understand the number of instances that are required, the cost implications of those instances as well as the number of shards that we need to have.

With the MongoDB sizer, users can get clear visibility into Atlas topology that will work for them.

Application refactoring

Application migrator

The application migrator is a set of libraries that ease migrating applications to use MongoDB. The libraries help with:

-

Quickly bootstrapping read / write interfaces with MongoDB collections

-

Decorators for data type conversions from SQL to MongoDB

-

Support for feature flag implementations to help in risk mitigated migrations.

The libraries can help with 2 modes of migration:

-

Dual database approach - SQL and MongoDB DBs coexist for a period of time. A subset of data resides in SQL while another subset of data is persisted in MongoDB. This is required in situations where mission critical data is in SQL and one time migration will be very risky.

-

One shot migration - Complete cutover of SQL DB and the data will be persisted only in MongoDB.

Stored procedures annotator

The annotator helps address one of the major pain points with SQL migrations : Conversion of stored procedures. The annotator takes a SP as a input and provides you with :

-

MongoDB operators that are equivalent to the given SQL operators.

-

Target collection names & attribute names for the given SQL table names. This is based on the schema mapping between SQL and MongoDB.

The annotator helps in rapidly rewriting the existing stored procedures into MongoDB queries.

MongoDB code scanner

In large enterprises, development teams are not fully conversant with MongoDB and consequently do not use the right coding practices on MongoDB. The MongoDB code scanner is a Semgrep based tool that highlights MongoDB coding issues. This helps the developers in understanding the MongoDB coding best practices and also prevents bad code from getting into production.

Data migration & synchronization

Migrating from a SQL store to MongoDB brings multiple challenges:

-

Migration requires code for schema transformation. This code can become quite complex.

-

Ongoing change capture requires custom programming.

-

Handling dependencies between migration jobs and managing migration infrastructure can become cumbersome.

-

Validation of migrated data takes significant time if the record set becomes large.

The MongoDB Relational migrator addresses all these pain points and can be used to rapidly perform the data migration from SQL to MongoDB

Spark migrator

Mainframe databases like DB2, Big data stores like Hadoop are not supported yet on Relational migrator. We have also seen cases where transformations can become very complex - multi level hierarchical mapping on SQL DBs etc. For these cases PeerIslands has built a fully configuration driven Spark based migration tool which can be used to support migration from any Spark supported source databases.

1Data

For microservices transformation, there is a frequent need to perform real time data synchronization between source SQL workloads and MongoDB for extended periods of time. In some cases we would also need reverse data pipelines from MongoDB to SQL workloads. PeerIslands has done extensive work with customers on building real time data pipelines and brings to the table field tested architectural patterns for addressing this requirement. This complete suite of tools enables PeerIslands to rapidly deliver on SQL migrations.

Typical engagement approach

PeerIslands follows a standardized approach designed to mitigate migration risks and deliver seamless migration experience. We follow a 4 step process that provides:

-

Migration to MongoDB using MongoDB best practices.

-

Getting migrations First time right.

| Stages | Pre Consult | Discovery | Solution blueprint | Migration factory |

|---|---|---|---|---|

| Description | Understand scope of migration and define pre requisites | Leverage PI tools for application and data analysis | Develop and validate solution blueprints for various components of the migration Finalize migration strategy and end state architecture |

Implementation of solution blueprints and solution delivery |

| Deliverables | Migration scope definition

Pre requisites definition |

SQL footprint analysis Stored procedures analysis Understand application complexity Workload characteristics NFRs | Target MongoDB schema

Data migration strategy

Target technical architecture

SP migration strategy

Services refactoring templates & PoC

DAL layer refactoring templates & PoC

Data pipeline PoCs Detailed work package plan & estimates |

Application development Data migration Data validation Performance tuning |