At Ignite, MongoDB and Microsoft announced a new milestone in their relationship: the integration of MongoDB Atlas with Microsoft Azure OpenAI Service. This integration reflects the commitment behind our collaboration and helps customers build generative AI applications grounded in enterprise data, enabling rich and meaningful user interactions.

Advanced AI and rich data: Best of both worlds

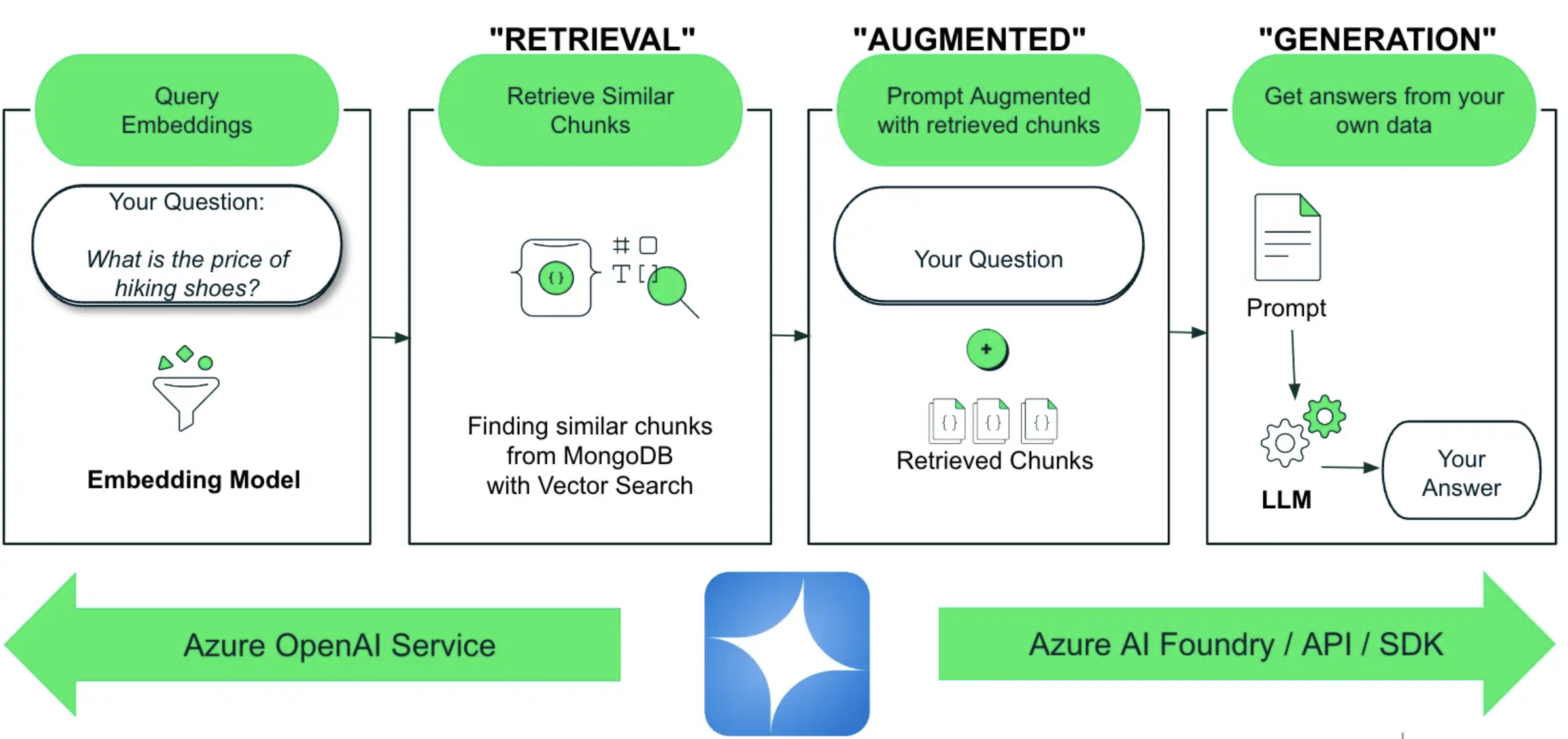

Retrieval-augmented generation (RAG) is becoming the norm for generative AI applications. Without RAG, large language models (LLMs) can only answer based on the public data on which they were trained. This is a significant limitation that inhibits the use of LLMs in production. RAG overcomes this challenge by grounding the LLM in enterprise-owned data that the LLM would otherwise have no knowledge of.

Azure OpenAI Service helps customers build state-of-the-art generative AI applications. Customers choose powerful models such as GPT-4o and add context from enterprise data, which can now come from MongoDB Atlas, to get more relevant and insightful conversations. Azure OpenAI Service is available as an API, as an SDK, and in Azure AI Foundry. Customers using Azure AI Foundry can now add a MongoDB Atlas vector index in the chat playground and evaluate LLMs against the vector data stored in MongoDB Atlas collections. The API can also be invoked directly from applications to build RAG applications grounded in MongoDB Atlas data.

Azure AI Foundry is a good starting point for enterprises aiming to build innovative generative AI applications in a secure and responsible way. The model catalog in Azure AI Foundry is a hub of models from Azure OpenAI Service and other leading providers, offering foundation, open, task, and industry-specific models. AI Foundry supports the entire lifecycle of an AI application, from model evaluation and benchmarking through customization, flow creation, and debugging, to managing generative AI applications in production, all without compromising security and while following responsible AI best practices.

MongoDB Atlas, for its part, is one of the world’s most popular document model databases and has native vector search capabilities. MongoDB Atlas Vector Search was recognized by the Retool Survey as a leader with the highest Net Promoter Score (NPS) in 2023 and 2024. Thanks to its inherently flexible document data model, MongoDB Atlas stores vector data alongside operational data without any additional overhead. MongoDB Atlas supports full-text and hybrid search along with vector search on dedicated search nodes in Azure, which ensures that operational workloads are not impacted by generative AI application workloads.
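To make "vector data alongside operational data" concrete, here is a minimal sketch of what such a document and its vector index definition might look like. The field names (`sku`, `category`, `embedding`) and the sample values are illustrative assumptions, not taken from the integration itself; the 1536 dimensions correspond to the output size of text-embedding-ada-002.

```python
# Hypothetical product document: operational fields and the embedding
# live in the same record, so no separate vector store or ETL is needed.
EMBEDDING_DIMENSIONS = 1536  # output size of text-embedding-ada-002

product_doc = {
    "sku": "TENT-204",                           # operational data
    "name": "Two-person trekking tent",
    "category": "camping",
    "price": 189.0,
    "description": "Lightweight tent for two people.",
    "embedding": [0.0] * EMBEDDING_DIMENSIONS,   # placeholder vector
}

# Atlas Vector Search index definition: one vector field, plus a filter
# field so that queries can pre-filter on category.
vector_index_definition = {
    "fields": [
        {
            "type": "vector",
            "path": "embedding",
            "numDimensions": EMBEDDING_DIMENSIONS,
            "similarity": "cosine",
        },
        {"type": "filter", "path": "category"},
    ]
}


def validate_document(doc, index_definition):
    """Return True when the document's vector matches the index dimensions."""
    vector_field = next(
        f for f in index_definition["fields"] if f["type"] == "vector"
    )
    vector = doc.get(vector_field["path"])
    return isinstance(vector, list) and len(vector) == vector_field["numDimensions"]
```

A check like `validate_document` is optional, but it can catch a mismatch between the embedding model and the index before documents are written.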

MongoDB Atlas with Azure OpenAI Service: RAG

MongoDB Atlas is preferred as a vector database for RAG applications because it stores vectors seamlessly with the rest of the operational data, which means no ETL, reduced architectural complexity, and cost savings. MongoDB’s native vector search uses the uniform MongoDB API, which means it can be combined with other MongoDB Query API constructs to enable pre-filtering and post-filtering. MongoDB Atlas is designed for reliability, scalability, and security, all of which are of prime importance for generative AI applications. Enabling easy access to MongoDB data via Azure OpenAI Service makes RAG implementations possible even for non-developers.
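As a rough sketch of pre-filtering and post-filtering, the function below builds an aggregation pipeline around the `$vectorSearch` stage. The index name `vector_index`, the field names, and the score threshold are assumptions made for illustration; the `category` field would need to be declared as a filter field in the vector index.

```python
def build_filtered_vector_search(query_vector, category, min_score=0.75, limit=5):
    """Build an aggregation pipeline with a pre-filter and a post-filter."""
    return [
        {
            "$vectorSearch": {
                "index": "vector_index",        # assumed index name
                "path": "embedding",            # assumed vector field
                "queryVector": query_vector,
                "numCandidates": limit * 20,    # candidates considered before ranking
                "limit": limit,
                # Pre-filter: only documents in this category are searched.
                "filter": {"category": {"$eq": category}},
            }
        },
        {
            # Expose the similarity score next to ordinary fields.
            "$project": {
                "_id": 0,
                "name": 1,
                "description": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
        # Post-filter: an ordinary Query API stage applied to the results.
        {"$match": {"score": {"$gte": min_score}}},
    ]
```

With PyMongo, the resulting list can be passed to `collection.aggregate(pipeline)`; the query vector has to come from the same embedding model that produced the stored vectors.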

The diagram below shows the RAG architecture implemented through this integration. The user’s question is converted to embeddings using an embedding model such as “text-embedding-ada-002.” In the retrieval stage, the embeddings of the user query are used for a vector search in MongoDB Atlas to find similar chunks in the MongoDB Atlas collection. In the augmentation stage, the chunks retrieved from MongoDB Atlas are added to the user query as context to create a prompt for the LLM. In the generation stage, the LLM is invoked with the augmented prompt and answers the query grounded in the context the prompt provides. As a result, the LLM provides accurate and relevant answers. Refer to the citations to see which data retrieved from MongoDB Atlas led to the answer provided by the LLM.
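The stages described above can be sketched in a few lines. This is an illustration of the flow only, not how the integration is implemented internally: `embed`, `search`, and `generate` are hypothetical callables standing in for the embedding model, the Atlas vector search, and the LLM call, and the prompt wording is an assumption.

```python
from typing import Callable, Dict, List


def build_augmented_messages(question: str, chunks: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Augmentation stage: place the retrieved chunks in the prompt as context."""
    context = "\n\n".join(
        f"[doc{i + 1}] {chunk['content']}" for i, chunk in enumerate(chunks)
    )
    system = "Answer using only the context below. Cite sources as [docN].\n\n" + context
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


def answer_with_rag(
    question: str,
    embed: Callable[[str], List[float]],
    search: Callable[[List[float], int], List[Dict[str, str]]],
    generate: Callable[[List[Dict[str, str]]], str],
    top_k: int = 3,
) -> Dict[str, object]:
    """Run the embedding, retrieval, augmentation, and generation stages in order."""
    query_vector = embed(question)                         # question -> embedding
    chunks = search(query_vector, top_k)                   # retrieval
    messages = build_augmented_messages(question, chunks)  # augmentation
    answer = generate(messages)                            # generation
    # Return the chunks too, so the caller can show them as citations.
    return {"answer": answer, "citations": chunks}
```

Passing the stages in as callables keeps the sketch independent of any particular SDK and makes each stage easy to replace with a fake in tests.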

MongoDB Atlas with Azure OpenAI Service: A seamless experience

The integration is available as a REST API that can be invoked from your applications like any other REST API. When invoking the API, developers add the MongoDB collection and vector index information to the request payload, along with the prompt and the system message and role information. The API returns the response together with citations, which point to the chunks retrieved from the MongoDB vector database and include each chunk's metadata for easy cross-verification.
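A request body for such a call might be assembled as in the sketch below. The field names (`mongo_db`, `fields_mapping`, `embedding_dependency`, and so on) and the location of the citations in the response follow our reading of the Azure OpenAI "On Your Data" data source pattern and should be treated as assumptions; check them against the official reference before use. The `mongo` settings dictionary and both function names are hypothetical.

```python
import json
from typing import Any, Dict, List


def build_chat_request(question: str, system_message: str, mongo: Dict[str, str]) -> Dict[str, Any]:
    """Build a chat completions body that points the model at an Atlas vector index."""
    data_source = {
        "type": "mongo_db",  # assumed data source type name
        "parameters": {
            "endpoint": mongo["endpoint"],
            "database_name": mongo["database"],
            "collection_name": mongo["collection"],
            "index_name": mongo["index"],
            "app_name": mongo["app_name"],
            "authentication": {
                "type": "username_and_password",
                "username": mongo["username"],
                "password": mongo["password"],
            },
            # Embedding deployment used to vectorize the user question.
            "embedding_dependency": {
                "type": "deployment_name",
                "deployment_name": mongo["embedding_deployment"],
            },
            "fields_mapping": {
                "content_fields": [mongo["content_field"]],
                "vector_fields": [mongo["vector_field"]],
            },
        },
    }
    return {
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": question},
        ],
        "data_sources": [data_source],
    }


def extract_citations(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Pull the citations out of a parsed response, or return an empty list."""
    choices = response.get("choices") or [{}]
    context = choices[0].get("message", {}).get("context", {})
    return context.get("citations", [])
```

The body would be serialized with `json.dumps` and sent as a POST to the chat completions endpoint of an Azure OpenAI deployment, with credentials read from configuration rather than hard-coded.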

The integration is also accessible from the chat playground in AI Foundry. A wizard takes only a few clicks to point the LLM at the data in a MongoDB Atlas collection when responding to queries, which provides an enhanced AI experience. MongoDB Atlas is available in the drop-down menu of the “Add your data” experience in the portal’s chat playground. Users just need to select MongoDB Atlas and point to the Atlas vector index to leverage the robust vector search capability of MongoDB Atlas and equip the LLMs with the precise information required to answer the queries. This makes it much easier to create chatbots and copilots for internal applications or customer-facing portals.

Watch the video to see a demo of the integration in Azure AI Foundry and witness how easy it is now to bring your valuable data in MongoDB Atlas to your LLMs to build elegant conversational experiences.

Refer to the Microsoft official documentation for more details on the integration.