Overview

If you need to make changes to an object schema that is used in Atlas Device Sync, you can make non-breaking changes without any additional work. Breaking changes, however, require additional steps. Breaking (or destructive) changes include renaming an existing field and changing a field's data type.

For more information, see Update Your Data Model.

If you need to make a breaking schema change, you have two choices:

Terminate sync in the backend and then re-enable it from the start.

Create a partner collection, copy the old data to this new collection, and set up triggers to ensure data consistency.

The remainder of this guide leads you through creating a partner collection.

Warning

Restore Sync after Terminating Sync

When you terminate and re-enable Atlas Device Sync, clients can no longer Sync. Your client must implement a client reset handler to restore Sync. This handler can discard or attempt to recover unsynchronized changes.

Partner Collections

In the following procedure, the initial collection uses the JSON Schema below for a Task collection. Note that the schema for the Task contains an _id field of type objectId:

    {
      "title": "Task",
      "bsonType": "object",
      "required": ["_id", "name"],
      "properties": {
        "_id": { "bsonType": "objectId" },
        "_partition": { "bsonType": "string" },
        "name": { "bsonType": "string" }
      }
    }

The new schema is the same, except we want the _id field to be a string:

    {
      "title": "Task",
      "bsonType": "object",
      "required": ["_id", "name"],
      "properties": {
        "_id": { "bsonType": "string" },
        "_partition": { "bsonType": "string" },
        "name": { "bsonType": "string" }
      }
    }
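
To make the difference between the two schemas explicit, the following sketch compares the bsonType of each property and reports the ones that changed. The findTypeChanges helper is hypothetical and is not part of App Services; it only illustrates why this particular change counts as breaking.

```javascript
// Hypothetical helper: list properties whose bsonType differs between two schemas.
function findTypeChanges(oldSchema, newSchema) {
  const changes = [];
  for (const [field, oldDef] of Object.entries(oldSchema.properties)) {
    const newDef = newSchema.properties[field];
    // Only fields present in both schemas can have a changed type.
    if (newDef && newDef.bsonType !== oldDef.bsonType) {
      changes.push({ field, from: oldDef.bsonType, to: newDef.bsonType });
    }
  }
  return changes;
}

// The original Task schema from this guide.
const taskSchema = {
  title: "Task",
  bsonType: "object",
  required: ["_id", "name"],
  properties: {
    _id: { bsonType: "objectId" },
    _partition: { bsonType: "string" },
    name: { bsonType: "string" },
  },
};

// The new schema: identical except for the type of _id.
const taskV2Schema = {
  ...taskSchema,
  properties: { ...taskSchema.properties, _id: { bsonType: "string" } },
};

const typeChanges = findTypeChanges(taskSchema, taskV2Schema);
console.log(typeChanges);
```

Any entry in the result is a data type change on an existing field, which is one of the breaking changes described in the Overview.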

Procedure

Initialize Your Partner Collection with an Aggregation Pipeline

Since breaking changes cannot be performed directly on a synced object schema, you must create a partner collection with a schema containing the required changes. You must ensure that the partner collection has the same data as the original collection so that newer clients can synchronize with older clients.

The recommended approach to copying the data from your original collection to the new partner collection is to use the Aggregation Framework.

You can create and run an aggregation pipeline from the mongo shell (mongosh), by using the Aggregation Pipeline Builder in Compass, or with the aggregation pipeline builder in the Atlas Data Explorer.

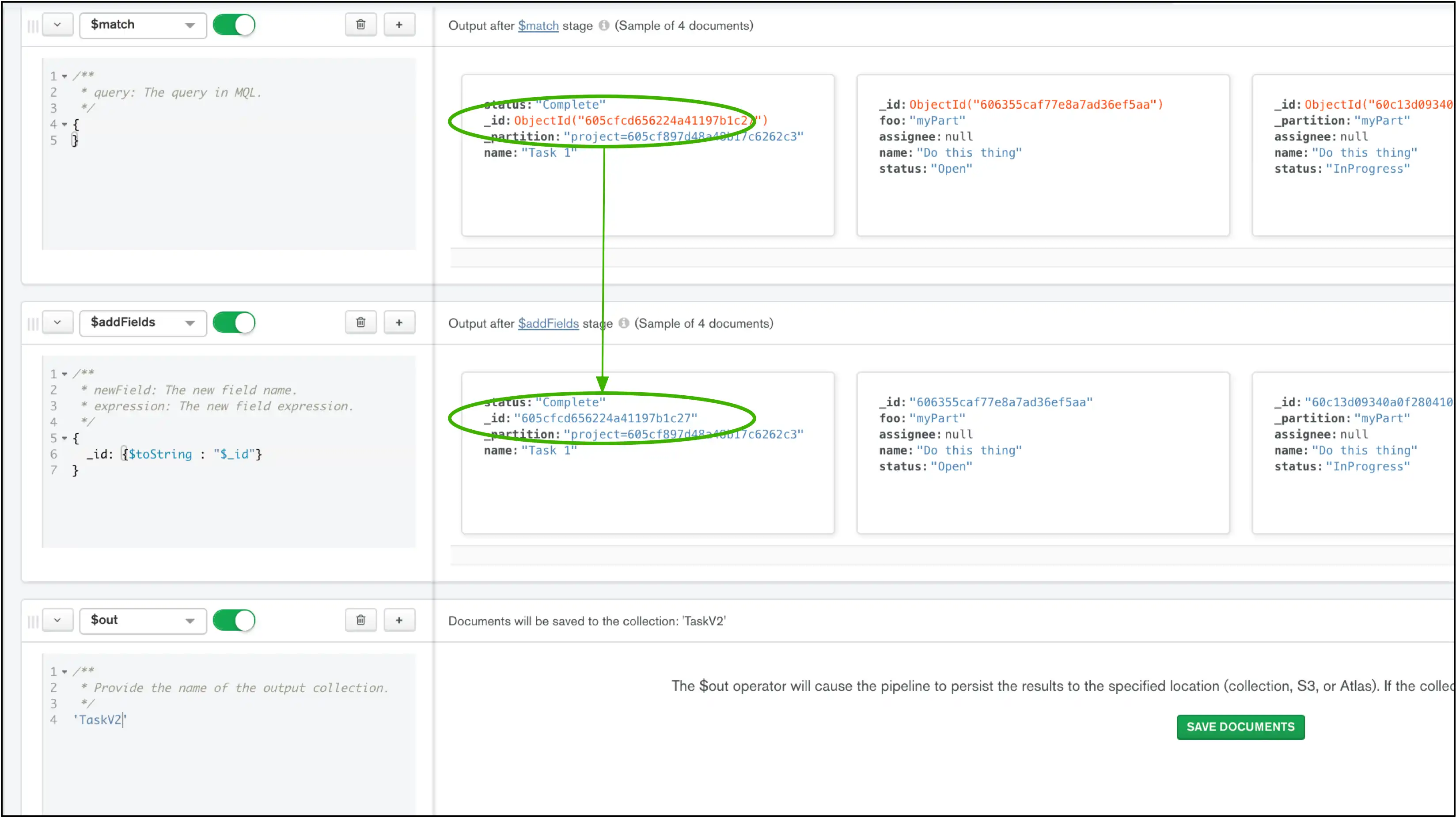

The pipeline will have the following stages:

Match all the documents in the initial collection by passing an empty filter to the $match operator.

Modify the fields of the initial collection by using an aggregation pipeline operator. In the following example, the data is transformed using the $addFields operator. The _id field is transformed to a string type with the $toString operator.

Write the transformed data to the partner collection by using the $out operator and specifying the partner collection name. In this example, the data is written to a new collection named TaskV2.

The Atlas and Compass UIs can represent the same pipeline. Note that both of these tools provide a preview of the changes; in this case, the conversion of the _id field from an ObjectId to a string.

The following example shows the complete aggregation pipeline as it would look if you used mongosh to do the conversion:

    // switch the current db to the db that the Task collection is stored in
    use "<database-name>"
    collection = db.Task;
    collection.aggregate([
      { $match: {} }, // match all documents in the Task collection
      {
        $addFields: { // transform the data
          _id: { $toString: "$_id" }, // change the _id field of the data to a string type
        },
      },
      { $out: "TaskV2" }, // output the data to a partner collection, TaskV2
    ]);
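
If you expect to rerun the copy, or to copy into differently named partner collections, it may help to build the pipeline from a function. The sketch below is one way to do that; buildCopyPipeline is a hypothetical helper name, and the stages are the same three described above.

```javascript
// Hypothetical helper: build the one-time copy pipeline for a target collection.
function buildCopyPipeline(targetCollection) {
  return [
    { $match: {} }, // match every document in the source collection
    { $addFields: { _id: { $toString: "$_id" } } }, // convert _id to a string
    { $out: targetCollection }, // write the results to the partner collection
  ];
}

const copyPipeline = buildCopyPipeline("TaskV2");
console.log(JSON.stringify(copyPipeline, null, 2));
```

In mongosh, the result could be passed directly to db.Task.aggregate(). Comparing db.Task.countDocuments() with db.TaskV2.countDocuments() afterwards is one simple way to check that every document was copied.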

Set up Database Triggers for Your Partner Collections

Once your partner collection is set up, you can use it to read existing data. However, any new writes to the data of either collection will not be in the other collection. This causes the old clients to be out of sync with the new clients.

To ensure that data is reflected in both collections, you set up a database trigger on each collection. When data is written to one collection, the trigger's function performs the write to the partner collection.

Follow the steps in the database trigger documentation to create a trigger that copies data from the Task collection to the TaskV2 collection for all operation types. Repeat these steps to create a second trigger that copies data from the TaskV2 collection to the Task collection.

Add Trigger Functions

Triggers require backing functions that run when the trigger fires. In this case, we need to create two functions: a forward-migration function and a reverse-migration function.

The forward migration trigger listens for inserts, updates, and deletes in the Task collection, modifies them to reflect the TaskV2 collection's schema, and then applies them to the TaskV2 collection.

To listen for changes to the TaskV2 collection and apply them to the Task collection, write a reverse-migration function for the TaskV2 collection's trigger. The reverse migration follows the same idea as the previous step.

In the forward-migration function, we check which operation has triggered the function. If the operation type is Delete (meaning a document has been deleted in the Task collection), the document is also deleted in the TaskV2 collection. If the operation type is a Write (inserted or modified) event, an aggregation pipeline is created. In the pipeline, the inserted or modified document in the Task collection is extracted using the $match operator. The extracted document is then transformed to adhere to the TaskV2 collection's schema. Finally, the transformed data is written to the TaskV2 collection by using the $merge operator:

    exports = function (changeEvent) {
      const db = context.services.get("mongodb-atlas").db("ExampleDB");
      const collection = db.collection("Task");

      // If the event type is "invalidate", the next const throws an error.
      // Return early to avoid this.
      if (!changeEvent.documentKey) {
        return;
      }

      // The changed document's _id as an ObjectId:
      const changedDocId = changeEvent.documentKey._id;

      // If a document in the Task collection has been deleted,
      // delete the equivalent object in the TaskV2 collection:
      if (changeEvent.operationType === "delete") {
        const tasksV2Collection = db.collection("TaskV2");
        // Convert the deleted document's _id to a string value
        // to match TaskV2's schema:
        const deletedDocumentID = changedDocId.toString();
        return tasksV2Collection.deleteOne({ _id: deletedDocumentID });
      }

      // A document in the Task collection has been created,
      // modified, or replaced, so create a pipeline to handle the change:
      const pipeline = [
        // Find the changed document data in the Task collection:
        { $match: { _id: changedDocId } },
        {
          // Transform the document by changing the _id field to a string:
          $addFields: {
            _id: { $toString: "$_id" },
          },
        },
        // Insert the document into TaskV2, using the $merge operator
        // to avoid overwriting the existing data in TaskV2:
        { $merge: "TaskV2" },
      ];
      return collection.aggregate(pipeline);
    };
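
The branching in the forward-migration function can be checked outside App Services by separating the decision from the database calls. The following is a minimal sketch under that assumption: planForwardMigration is a hypothetical pure function that returns a description of the work instead of performing it, and fakeId is a stand-in for an ObjectId.

```javascript
// Hypothetical pure function mirroring the forward-migration branching.
function planForwardMigration(changeEvent) {
  // Events without a documentKey (such as "invalidate") are skipped.
  if (!changeEvent.documentKey) {
    return { action: "skip" };
  }
  const id = changeEvent.documentKey._id;
  // Deletes are mirrored using the string form of the _id.
  if (changeEvent.operationType === "delete") {
    return { action: "delete", filter: { _id: id.toString() } };
  }
  // Inserts, updates, and replaces are copied with a pipeline.
  return {
    action: "aggregate",
    pipeline: [
      { $match: { _id: id } },
      { $addFields: { _id: { $toString: "$_id" } } },
      { $merge: "TaskV2" },
    ],
  };
}

// Stand-in for an ObjectId: only toString() matters in this sketch.
const fakeId = { toString: () => "64b7f0c2a1b2c3d4e5f60718" };

const deletePlan = planForwardMigration({
  operationType: "delete",
  documentKey: { _id: fakeId },
});
const insertPlan = planForwardMigration({
  operationType: "insert",
  documentKey: { _id: fakeId },
});
const invalidatePlan = planForwardMigration({ operationType: "invalidate" });

console.log(deletePlan.action, insertPlan.action, invalidatePlan.action);
```

Keeping the decision logic free of context and collection calls makes it possible to run mock change events through it locally before deploying the trigger function.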

The reverse-migration function goes through similar steps as the example in the prior step. If a document has been deleted in one collection, the document is also deleted in the other collection. If the operation type is a write event, the changed document from TaskV2 is extracted, transformed to match the Task collection's schema, and written into the Task collection:

    exports = function (changeEvent) {
      const db = context.services.get("mongodb-atlas").db("ExampleDB");
      const collection = db.collection("TaskV2");

      // If the event type is "invalidate", the next const throws an error.
      // Return early to avoid this.
      if (!changeEvent.documentKey) {
        return;
      }

      // The changed document's _id as a string:
      const changedDocId = changeEvent.documentKey._id;

      // If a document in the TaskV2 collection has been deleted,
      // delete the equivalent object in the Task collection:
      if (changeEvent.operationType === "delete") {
        const taskCollection = db.collection("Task");
        // Convert the deleted document's _id to an ObjectId value
        // to match Task's schema:
        const deletedDocumentID = new BSON.ObjectId(changedDocId);
        return taskCollection.deleteOne({ _id: deletedDocumentID });
      }

      // A document in the TaskV2 collection has been created,
      // modified, or replaced, so create a pipeline to handle the change:
      const pipeline = [
        // Find the changed document data in the TaskV2 collection:
        { $match: { _id: changedDocId } },
        {
          // Transform the document by changing the _id field to an ObjectId:
          $addFields: {
            _id: { $toObjectId: "$_id" },
          },
        },
        // Insert the document into Task, using the $merge operator:
        { $merge: "Task" },
      ];
      return collection.aggregate(pipeline);
    };
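
Converting a string _id back to the objectId type that the Task schema requires only succeeds when the string is a 24-character hexadecimal value. If newer clients can create TaskV2 documents with other string IDs (an assumption about your app, not something this guide states), a guard along the lines of the hypothetical isObjectIdString below could be used to detect such documents before attempting the conversion.

```javascript
// Hypothetical guard: true when the value is a 24-character hexadecimal string,
// which is the string form of an ObjectId.
function isObjectIdString(value) {
  return typeof value === "string" && /^[0-9a-fA-F]{24}$/.test(value);
}

// Sample _id values as they might appear in TaskV2 (illustrative only).
const candidates = [
  "64b7f0c2a1b2c3d4e5f60718", // valid: 24 hex characters
  "task-42", // not convertible: not hexadecimal
  "64B7F0C2A1B2C3D4E5F60718", // valid: uppercase hex is still hex
];

const convertible = candidates.filter(isObjectIdString);
console.log(convertible);
```

How to handle IDs that fail the check (skip, log, or map them some other way) is a design decision for your app and is outside the scope of this sketch.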

Development Mode and Breaking Changes

Applies to App Services Apps created after September 13, 2023.

App Services Apps in Development Mode that were created after September 13, 2023 can make breaking changes from their client code to synced object schemas.

Refer to Development Mode for details about making breaking changes in Development Mode.

Development Mode is not suitable for production use. If you use Development Mode, make sure to disable it before moving your app to production.