- Use cases: Gen AI, Fraud Prevention

- Industries: Financial Services, Insurance, Retail

- Products and tools: Atlas, Atlas Clusters, Change Streams, Atlas Triggers, Spark Streaming Connector

- Partners: Databricks

Real-time card fraud solution accelerator

Solution Overview

In this solution, you'll learn how to build an ML-based fraud detection solution using MongoDB and Databricks. Its key features include data completeness through integration with external sources, real-time processing for timely fraud detection, AI/ML modeling to identify potential fraud patterns, real-time monitoring for instant analysis, model observability for full visibility into fraud behaviors, flexibility, scalability, and robust security. The system is designed to be easy to operate and to foster collaboration between application development and data science teams. It also supports end-to-end CI/CD pipelines that keep systems up to date and secure.

Existing Challenges:

- Incomplete data visibility from legacy systems: Lack of access to relevant data sources hampers fraud pattern detection.

- Latency issues in fraud prevention systems: Legacy systems lack real-time processing, causing delays in fraud detection.

- Difficulty in adapting legacy systems: Inflexibility hinders the adoption of advanced fraud prevention technologies.

- Weak security protocols in legacy systems: Outdated security leaves systems vulnerable to cyberattacks.

- Operational challenges due to technical sprawl: Diverse technologies complicate maintenance and updates.

- High operating costs of legacy systems: Costly maintenance limits the budget available for fraud prevention.

- Lack of collaboration between teams: Siloed approach leads to delayed solutions and higher overhead.

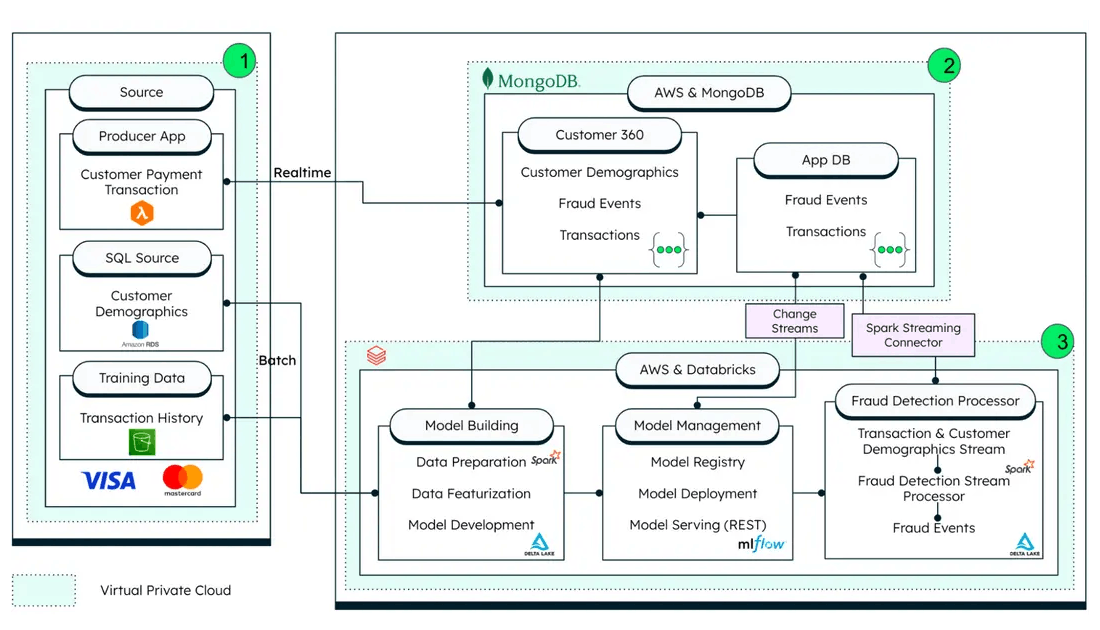

Reference Architecture

The ML-based fraud solution suits industries where real-time processing, AI/ML modeling, model observability, flexibility, and collaboration between teams are essential. End-to-end CI/CD pipelines keep operations up to date and secure. Relevant industries include:

- Financial Services - Fraud detection in transactions

- E-commerce - Fraud detection in orders

- Healthcare and Insurance - Fraud detection in claims

Data Model Approach

As you can see from the domain diagram, credit card transactions involve three entities: the transaction itself, the merchant, and the payer. Because all three are important and are accessed together in the fraud detection application, we use the extended reference pattern and include fields about the transaction, merchant, and payer in a single document.
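A minimal sketch of such a document, built in Python. The helper `build_transaction_document` and the field names (`merchant_id`, `card_last4`, `home_country`, and so on) are hypothetical, not the sample repository's schema; the point is that only the merchant and payer fields the fraud check reads are copied into the transaction, alongside their IDs for reference.

```python
from datetime import datetime, timezone


def build_transaction_document(txn_id, amount, merchant, payer, ts=None):
    """Build one transaction document using the extended reference pattern.

    Hypothetical schema: only the merchant and payer fields that fraud
    checks read are copied in; the IDs point back to the full records.
    """
    return {
        "_id": txn_id,
        "amount": amount,
        "timestamp": ts or datetime.now(timezone.utc),
        # Subset of the merchant record (the full record stays in its own collection)
        "merchant": {
            "merchant_id": merchant["merchant_id"],
            "name": merchant["name"],
            "category": merchant["category"],
        },
        # Subset of the payer record
        "payer": {
            "customer_id": payer["customer_id"],
            "card_last4": payer["card_last4"],
            "home_country": payer["home_country"],
        },
    }


merchant = {"merchant_id": "m-17", "name": "Corner Store", "category": "grocery",
            "address": "1 Main St"}
payer = {"customer_id": "c-42", "card_last4": "4242", "home_country": "US",
         "email": "payer@example.com"}
doc = build_transaction_document("t-1001", 59.90, merchant, payer)
```

Fields such as the merchant address and the payer email stay in their source collections, so the transaction document stays small while the fraud check can still be answered in a single read.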

Building the Solution

The functional features listed above are implemented by a few architectural components:

- Data sourcing

  - Producer apps: The producer mobile app simulates the generation of live transactions.

  - Legacy data source: An external SQL data source provides customer demographics.

  - Training data: Historical transaction data needed for model training is sourced from cloud object storage (Amazon S3 or Microsoft Azure Blob Storage).

- MongoDB Atlas: Serves as the Operational Data Store (ODS) for card transactions and processes transactions in real time. The solution uses the MongoDB Atlas aggregation framework to perform in-app analytics and to process transactions based on pre-configured rules. It also communicates with Databricks for advanced AI/ML-based fraud detection via a native Spark connector.

- Databricks: Hosts the AI/ML platform that complements MongoDB Atlas in-app analytics. The fraud detection algorithm in this example is a notebook inspired by Databricks' fraud framework, and MLflow is used to manage the MLOps lifecycle of the model. The trained model is exposed as a REST endpoint.
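As one illustration of the in-app analytics the aggregation framework can run, the sketch below builds a pipeline that counts a customer's transactions and sums their amounts over the trailing 24 hours, which a per-day limit rule could use. It assumes the hypothetical field names `payer.customer_id`, `timestamp`, and `amount`; with PyMongo it would run as `db.transactions.aggregate(pipeline)`, where the `transactions` collection name is also an assumption.

```python
from datetime import datetime, timedelta, timezone


def trailing_day_pipeline(customer_id, now):
    """Aggregation pipeline: one customer's activity in the 24 hours before `now`."""
    window_start = now - timedelta(days=1)
    return [
        # Narrow to this customer's recent transactions first, so an index on
        # (payer.customer_id, timestamp) can serve the query
        {"$match": {
            "payer.customer_id": customer_id,
            "timestamp": {"$gte": window_start, "$lt": now},
        }},
        # Collapse the matches into a single summary document
        {"$group": {
            "_id": "$payer.customer_id",
            "txn_count": {"$sum": 1},
            "total_amount": {"$sum": "$amount"},
        }},
    ]


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
pipeline = trailing_day_pipeline("c-42", now)
```

A trailing 24-hour window is used here for simplicity; a calendar-day limit would compute `window_start` from midnight in the customer's time zone instead.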

The sections below describe each of these architectural components in more detail.

Data sourcing

The first step in implementing a comprehensive fraud detection solution is aggregating data from all relevant sources. As shown in Figure 1 above, an event-driven federated architecture collects and processes data from real-time sources such as producer apps, batch sources such as legacy SQL databases, and historical training data sets in offline storage. This approach draws on transaction summaries, customer demographics, merchant information, and other relevant sources, ensuring data completeness.

Additionally, the proposed event-driven architecture provides the following benefits:

- Real-time transaction data unification: card transaction event data such as transaction amount, location, time of the transaction, payment gateway information, and payment device information is collected in real time.

- Retraining on live data: monitoring models can be retrained on live event activity to combat fraud as it happens.

The producer application for the demonstration is a Python script that generates live transaction information at a configurable rate (transactions per second).
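A minimal sketch of such a producer, assuming a hypothetical document shape and a `sink` callable that receives each transaction; the demo's actual script and field names may differ. It paces sends against `time.monotonic()` to hold the configured rate.

```python
import random
import time
import uuid
from datetime import datetime, timezone


def make_transaction(rng):
    """Generate one synthetic card transaction (hypothetical schema)."""
    return {
        "_id": str(uuid.uuid4()),
        "amount": round(rng.uniform(1.0, 500.0), 2),
        "timestamp": datetime.now(timezone.utc),
        "payer": {"customer_id": f"c-{rng.randint(1, 100)}"},
        "merchant": {"merchant_id": f"m-{rng.randint(1, 20)}"},
    }


def run_producer(sink, rate_per_sec, total, seed=None):
    """Send `total` transactions to `sink` at roughly `rate_per_sec` per second."""
    if rate_per_sec <= 0:
        raise ValueError("rate_per_sec must be positive")
    rng = random.Random(seed)
    interval = 1.0 / rate_per_sec
    next_send = time.monotonic()
    for _ in range(total):
        sink(make_transaction(rng))
        # Schedule against a fixed timeline: time spent inside sink() is
        # subtracted from the wait instead of accumulating as drift
        next_send += interval
        delay = next_send - time.monotonic()
        if delay > 0:
            time.sleep(delay)
```

With PyMongo, the sink could be a collection's `insert_one` method, for example `run_producer(db.transactions.insert_one, rate_per_sec=10, total=1000)`. Passing the sink as a function keeps the generator testable without a database.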

MongoDB for event-driven, shift-left analytics architecture

MongoDB Atlas is a managed developer data platform with several features that make it well suited as the datastore for card fraud transaction classification: flexible data models that handle various types of data, high scalability to meet demand, advanced security features that support compliance with regulatory requirements, real-time data processing for fast and accurate fraud detection, and cloud-based deployment that stores data closer to customers and helps comply with local data privacy regulations.

The MongoDB Spark Streaming Connector integrates Apache Spark and MongoDB. Apache Spark, hosted by Databricks, processes and analyzes large amounts of data in real time. The connector translates MongoDB data into Spark DataFrames and supports real-time Spark streaming.

MongoDB's App Services features allow real-time processing of data through change streams and triggers. Because MongoDB Atlas can store and process various types of data and also offers streaming and trigger functionality, it is well suited to an event-driven architecture.
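Atlas Triggers are configured in Atlas itself, but the underlying change stream can also be consumed directly from a driver. A minimal PyMongo-based sketch, assuming new transactions arrive as inserts and that `on_transaction` is a hypothetical callback standing in for the downstream fraud checks:

```python
# Only react to newly inserted transactions; updates and deletes are ignored.
INSERT_ONLY = [{"$match": {"operationType": "insert"}}]


def handle_change(event, on_transaction):
    """Pass the new document from an insert change event to `on_transaction`.

    Returns True if the event was handled, False if it was skipped.
    """
    if event.get("operationType") != "insert":
        return False
    on_transaction(event["fullDocument"])
    return True


def consume(collection, on_transaction):
    """Block and process change events from a PyMongo Collection.

    Change streams need a replica set or sharded cluster, which Atlas provides.
    """
    with collection.watch(INSERT_ONLY) as stream:
        for event in stream:
            handle_change(event, on_transaction)
```

Separating `handle_change` from `consume` lets the routing logic be exercised with plain dictionaries, while `consume` stays a thin wrapper around `Collection.watch`.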

This solution uses MongoDB's rich connector ecosystem and App Services to process transactions in real time. An Atlas Trigger function makes a REST call to an AI/ML model served through the Databricks MLflow framework.
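The trigger itself runs as an Atlas Function, but the shape of the REST call is the same from any client. A hedged Python sketch using only the standard library, assuming a Databricks Model Serving endpoint that accepts MLflow's `dataframe_records` JSON format; the endpoint URL, token, and feature names are placeholders, not values from the sample repository:

```python
import json
import urllib.request


def build_scoring_request(endpoint_url, token, records):
    """Build a POST request asking the served model to score `records`.

    `records` is a list of dicts, one per transaction, keyed by feature name.
    """
    body = json.dumps({"dataframe_records": records}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def score(endpoint_url, token, records, timeout=5.0):
    """Send the request and return the decoded JSON response."""
    request = build_scoring_request(endpoint_url, token, records)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())
```

A call might look like `score("https://<workspace-host>/serving-endpoints/<endpoint-name>/invocations", token, [{"amount": 59.9}])`; that URL pattern follows Databricks Model Serving conventions and should be checked against your workspace. A short timeout matters because the scoring call sits on the transaction path.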

The example solution implements rules-based fraud prevention by storing user-defined payment limits and related information in a user settings collection, as shown. These include the maximum dollar amount per transaction, the number of transactions allowed per day, and other user-related details. Filtering transactions against these rules before invoking expensive AI/ML models reduces the overall cost of fraud prevention.
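A minimal sketch of that pre-filter, assuming a hypothetical `UserSettings` shape holding the two limits named above, and assuming a breached rule leads to a decline (the sample solution may flag or hold the transaction instead):

```python
from dataclasses import dataclass


@dataclass
class UserSettings:
    """Per-user limits, as they might be stored in the user settings collection."""
    max_txn_amount: float    # maximum dollar amount for a single transaction
    max_txns_per_day: int    # maximum number of transactions per day


def apply_rules(amount, txns_already_today, settings):
    """Return "decline" if a user-defined limit is breached, else "score".

    `txns_already_today` counts the user's transactions made earlier today,
    not including this one. Only transactions that pass every rule are sent
    on to the (more expensive) AI/ML model.
    """
    if amount > settings.max_txn_amount:
        return "decline"
    if txns_already_today + 1 > settings.max_txns_per_day:
        return "decline"
    return "score"


settings = UserSettings(max_txn_amount=500.0, max_txns_per_day=10)
```

Because these checks are simple comparisons, they cost far less than a model call, which is why the rules run first.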

Databricks as an AI/ML ops platform

Databricks is a powerful AI/ML platform for developing models that identify fraudulent transactions. One of its key features is support for real-time analytics, which, as discussed above, is central to modern fraud detection systems.

Databricks includes MLflow, a tool for managing the end-to-end machine learning lifecycle. MLflow lets users track experiments, reproduce results, and deploy models at scale, which simplifies complex machine learning workflows. It also provides model observability: access to model metrics, logs, and other relevant data makes it easy to track model performance, debug issues, and improve model accuracy over time. Together, these capabilities support the design of modern AI/ML-based fraud detection systems.

Technologies and Products Used

MongoDB Atlas Developer Data Platform

Partner Technologies

Key Considerations

The proposed solution's functional and nonfunctional features include:

- Data completeness: Integrated with external sources for accurate data analysis

- Real-time processing: Enables timely detection of fraudulent activities

- AI/ML modeling: Identifies potential fraud patterns and behaviors

- Real-time monitoring: Allows instant data processing and analysis

- Model observability: Ensures full visibility into fraud patterns

- Flexibility and scalability: Accommodates changing business needs

- Robust security measures: Protects against potential breaches

- Ease of operation: Reduces operational complexities

- Application and data science team collaboration: Aligns goals and fosters cooperation

- End-to-end CI/CD pipeline support: Ensures up-to-date and secure systems

Authors

- Shiv Pullepu, MongoDB

- Luca Napoli, MongoDB

- Ashwin Gangadhar, MongoDB

- Rajesh Vinayagam, MongoDB

GitHub Repository: Card Fraud

Create this solution yourself with the associated sample data, functions, and code.

Credit Card Fraud Walkthrough Demo

Watch the creation of a credit card fraud detection solution and view it in a real-time demo.

MongoDB for Financial Services

Learn how MongoDB’s developer data platform supports a wide range of use cases in the financial services industry.

Fraud prevention with MongoDB

Analyze and detect fraud in real time and satisfy Know Your Customer (KYC) requirements.