Early forms of data analysis required carefully structured datasets. Nowadays, however, users and businesses are generating more unstructured data, which is huge and unformatted. As a result, traditional techniques are no longer sufficient to analyze unstructured data.

Read on to learn more about unstructured data and the techniques used to analyze it.

What is unstructured data analysis?

Unstructured data is data that doesn’t have a fixed form or structure. Images, videos, audio files, text files, social media data, geospatial data, data from IoT devices, and surveillance data are examples of unstructured data. About 80%-90% of data is unstructured. Businesses process and analyze unstructured data for different purposes, like improving operations and increasing revenue.

Unstructured data analysis is complex and requires specialized techniques, unlike structured data, which is straightforward to store and analyze.

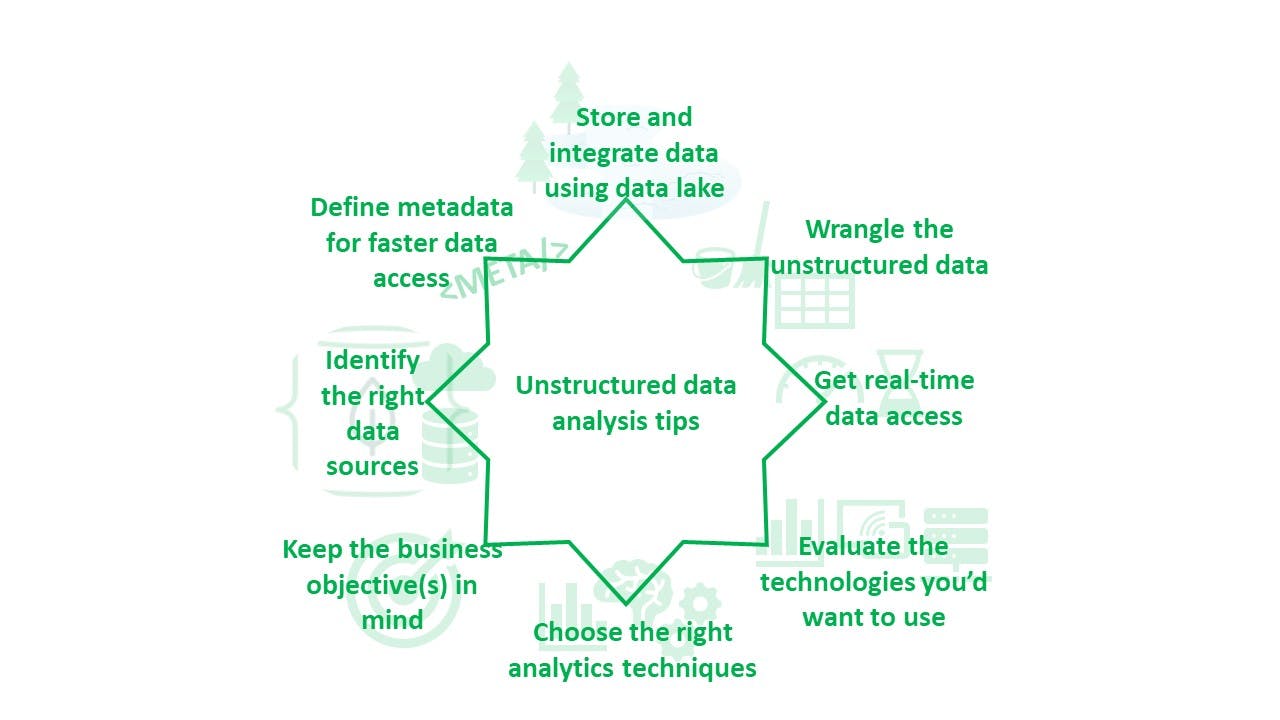

Here is a quick glance at all of the unstructured data analysis techniques and tips discussed in this article:

- Keep the business objective(s) in mind

- Define metadata for faster data access

- Choose the right analytics techniques

- Exploratory data analysis techniques

- Qualitative data analysis techniques

- Artificial Intelligence (AI) and Machine Learning (ML) techniques

- Identify the right data sources

- Evaluate the technologies you’d want to use

- Get real-time data access

- Store and integrate data using data lake

- Wrangle the data to get the desired features

In the next few sections, we’ll discuss the various unstructured data analysis techniques and tips, challenges in handling unstructured data, and suggestions for overcoming these challenges.

Tips for unstructured data analytics

Before applying unstructured data analysis techniques, businesses need to prepare the data and make it usable for generating insights. These tips will help manage your unstructured data in a better way:

Keep the business objective(s) in mind

The analytical techniques you choose should match up to your business objectives. Let’s say the objective is to identify a face in an image. An image is a type of unstructured data. You need some features for mapping—face shape, eye color, width of the mouth, and so on. Businesses can store the values associated with these features in a flexible semi-structured format, like a JSON document, for analysis. You can use MongoDB for this type of data storage and processing.

Define metadata for faster data access

Metadata stores information about data. Using metadata, an analyst can quickly find data related to their organization or business objectives.

Suppose you have a thousand documents of 10 thousand words or more each. The documents are housed in a storage system along with a large file (metadata file) that contains information about all the documents. Metadata could include information like table of contents, title, author, creation date, tags, or number of words for each document.

If you want to find a particular document, one option is to scan through all one thousand documents to identify the document you are looking for—not so performant.

Another option is to look through the metadata file and get the exact document location. This option gives you faster access to the right document.

Since metadata describes data, you can identify the common types of data you store and find metadata opportunities. This will help efficiently manage your unstructured data (like the above text documents) in your storage environment.

Choose the right analytics techniques

When analyzing unstructured data, it is important to select the right techniques depending on the intent of the unstructured data analysis. Here are two ways you might apply different techniques for different types of situations:

- To detect a theft in your area using CCTV footage, you would need to apply advanced deep learning techniques like object recognition, face analysis, and crowd analysis.

- To find out the average number of milk cartons bought by families in a particular gated community, you can apply simple quantitative analysis and group the residents based on a certain criteria like 0, 1-3, 4-6, >6.

You will learn more about the different unstructured data analysis techniques in the next section.

Identify the right data sources

Unstructured data often comes from multiple sources. It is important to select reliable and relevant data sources for data collection.

For example, a single user may generate data from social media, IoT devices, recording devices, and so on. Analysts need to identify whether they need data from all or few sources to get the right data they need for analysis. This way, they can store only the relevant data for querying and gathering insights.

Many companies use data lakes to unify data from multiple sources.

Evaluate the technologies you’d want to use

Choose the tools that provide scalability, availability, and query capabilities for your particular use case. Some tools provide advanced data analytics techniques. These tools often come with a higher price tag and management overhead, so make an informed choice from the available analytics technologies. Some key technologies that enable unstructured big data analytics for businesses are predictive analytics, NoSQL databases, stream analytics, and data integration.

Get real-time data access

For real-time analytics, it is necessary to have access to new data in real time. For example, fraud prevention or personalized offers are more valuable when fraudulent activity is happening or a customer is still shopping, respectively.

With MongoDB, you can capture refined datasets from multiple data sources. You can also combine, enrich, and analyze multi-model data (i.e., semi-structured, unstructured, and geospatial data) within the database, delivering action-driven and real-time insights with speed and simplicity.

Store and integrate data using data lakes

Data lakes unify and store unstructured data from many sources in its native format.

Wrangle the unstructured data

Before applying unstructured data analysis techniques, make sure the data is clean and all the valuable information is present. If there is a lot of noise in the data, the insights will not be accurate.

Unstructured data analysis techniques

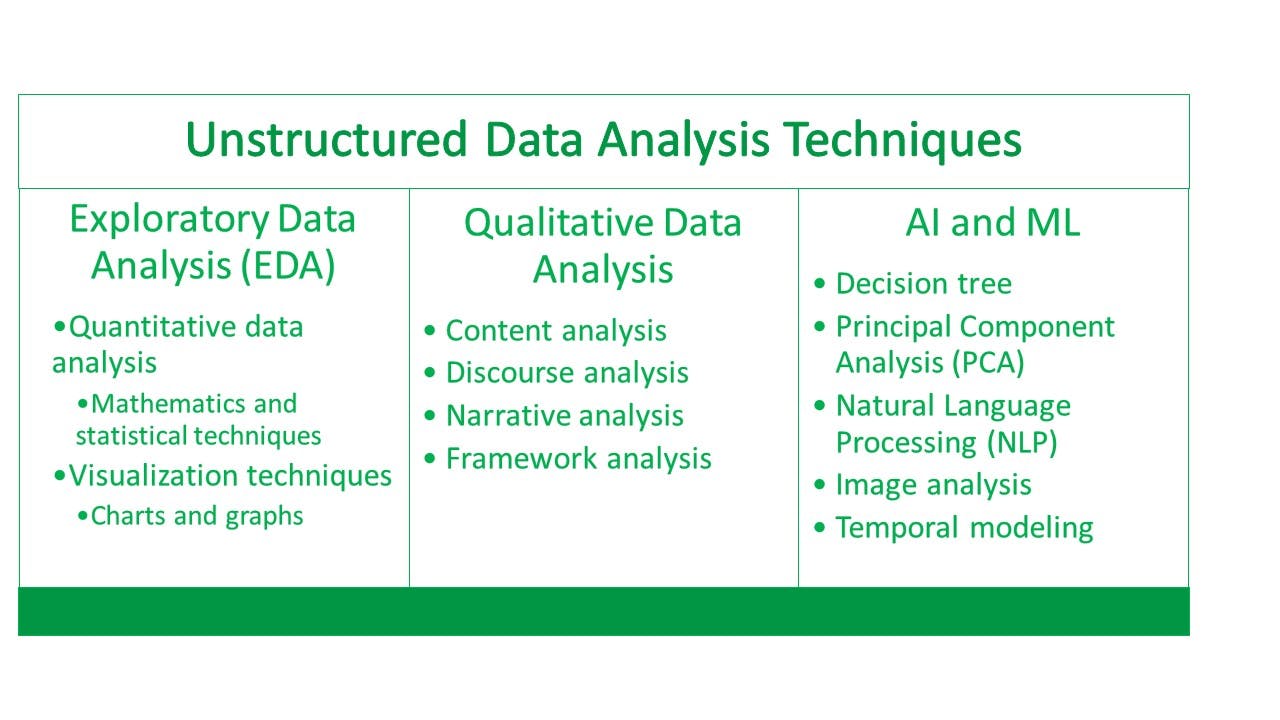

Once you have considered the above tips and have all the data ready for analysis, you can use one or more of the unstructured data analytics techniques below:

- Perform exploratory data analysis for quantitative data

- Apply qualitative data analysis techniques for qualitative data

- Use AI and ML techniques for advanced analytics

Let’s learn more about the above techniques:

Exploratory Data Analysis

Exploratory Data Analysis (EDA) is a set of initial investigations done to identify the main characteristics of data. It is done using summary statistics and graphics. EDA includes multiple techniques, including:

Quantitative data analysis

Quantitative data analysis techniques give discrete values and results. These techniques include mathematical and statistical analysis like finding the mean, correlations, range, standard deviation, labeling data (classification), regression analysis techniques, cluster analysis, text analytics, keyword search, and hypothesis testing using random sample data. The MongoDB aggregation framework provides rich capabilities for quantitative analysis. You can also use unstructured data analysis tools like R/Python for advanced unstructured data analytics for data stored in MongoDB.

Visualization techniques

Exploratory data analysis often uses visual methods to uncover relationships between the data variables. You can easily identify patterns and eliminate outliers and anomalies. Some popular techniques are dimensionality reduction, graphical techniques like multivariate charts, histogram, box plots, and more. For example, flow maps can show how many people travel to and from New York City per day. Pie charts are a great way to explore data distributions across various categories, including which age groups of people like to read books or watch TV and so on. MongoDB Charts presents a unified view of all your MongoDB Atlas data and quickly provides rich visual insights.

Qualitative data analysis

Qualitative data analysis mainly applies for unstructured text data. This can include documents, surveys, interview transcripts, social media content, medical records, and sometimes audio and video clips, as well. These techniques need reasoning, contextual understanding, social intelligence, and intuition rather than a mathematical formula (like in quantitative analysis). Content analysis, discourse analysis, and narrative analysis are some types of qualitative analysis. There are two approaches for qualitative data analysis:

- Inductive—Data analysts and researchers already have a theory and collect data and facts to accurately validate and prove the theory.

- Deductive—Data analysts have the data in hand, and derive insights from the data to develop a theory.

AI and ML

AI and ML unstructured data analysis techniques include decision trees, Principal Component Analysis (PCA), Natural Language Processing (NLP), artificial neural networks, image analysis, temporal modeling techniques, market segmentation analysis, and more. These techniques help with predictive analytics and uncovering the data insights. Suppose you ordered a shipment of 100 bicycles, and want to track its delivery status at different times—temporal modeling techniques will do that for you! Similarly, to know how people are reacting to your new ad campaign—positive or negative—use sentiment analysis (NLP technique). MongoDB is a great choice for training ML models because of its flexible data model.

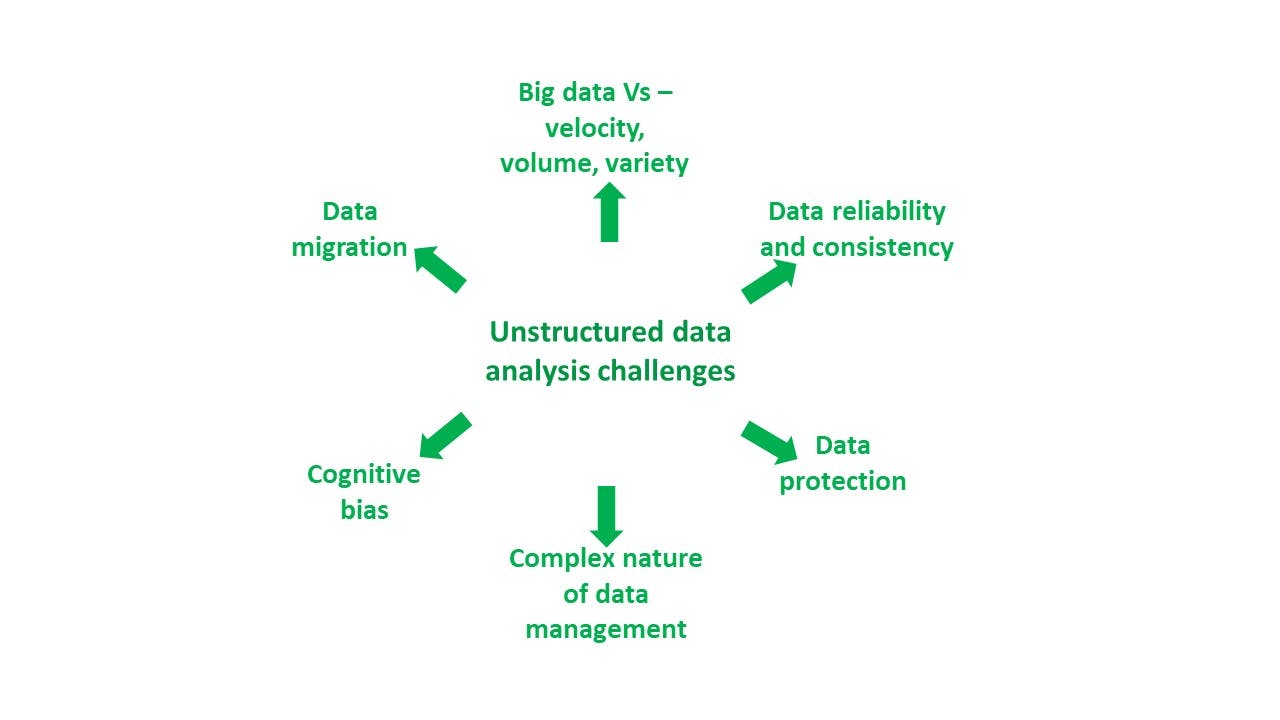

Unstructured data analysis challenges

Unstructured data analysis has a potential to generate huge business insights. However, traditional storage and analysis techniques are not sufficient to handle unstructured data. Here are some of the challenges that companies face in analyzing unstructured data:

- Big data characteristics: volume, velocity, variety

- Data reliability and consistency

- Data protection

- Complex nature of data management

- Data migration

- Cognitive bias

Big data characteristics

The volume, variety, and velocity of big data pose a big challenge for organizations performing unstructured data analysis, as about 80%-90% of big data is unstructured. Big data is ever growing. Facebook gets about a billion or more engagements per day, a person can do many financial transactions on a single day, a YouTube video becomes viral within a few seconds, and so on. Collection, cleaning, and storage requirements increase multifold, which can rapidly become unmanageable.

Data reliability and consistency

Data can come from multiple sources, including social media, forums, surveys, and so on. The data collected from these sources may or may not be reliable and consistent, which poses a challenge for accurate analysis. For instance, people may intentionally or unintentionally put incorrect information on their social media profiles. Similarly, they may fail to update the latest information in all information systems. This would mean one system has the correct information but the other will not. To increase data reliability and consistency, focus on improved data collection and data wrangling.

Data protection

Since data is at the heart of all analytics, data needs protection. Personal information can be misused or accidentally shared by someone within or outside an organization. In addition, with unethical hackers and cybercriminals trying to break every system and steal information, protecting data at each step can become complicated.

MongoDB Atlas provides security features like authentication, encryption, and authorization at different levels to protect data.

Complex nature of data management

Traditional systems are unable to handle all the unstructured data coming in, because the data has varied formats and speed. Accessing this kind of data, which has no consistent format, can be time-consuming and requires skilled resources to query and transform data into a usable format. The raw data might also have many duplicates, nulls, outliers, etc. Further, we need systems that can easily scale up as the data grows in size. These factors make data management complex.

You can overcome this challenge through a solid data management strategy that provides better query performance, accuracy, automated database capabilities, and scalability.

Data migration

Migration of data between two storage systems is a herculean task, especially with highly sensitive data. Some challenges that businesses face during data migration are loss of data, hardware challenges, and lack of knowledge of legacy and new systems. Best practices to mitigate these challenges include backing up the data, and doing a lot of testing throughout the implementation and maintenance phases.

MongoDB makes the data migration process easy through the Live Migration service and a host of database tools.

Cognitive bias

Cognitive bias refers to systematic errors in the way humans perceive and interpret certain pieces of information. People might not share or accept information that does not match with their personal views or beliefs, leading to inaccurate data collection and processing. For example, analysts may rely only on few data sources, while missing new sources that might potentially improve the analysis results.

You can try to reduce cognitive bias by educating the data analysis teams to be aware of the different types of biases, so that they can build more accurate models.

Conclusion

With unstructured data exponentially growing, analysts have to find reliable ways to tap and analyze the data and make informed business decisions. There are many advanced unstructured data analysis techniques that are helping organizations follow a data-driven approach and enhance their business processes and revenue. Businesses can perform advanced unstructured data analytics with MongoDB’s Application Data Platform, helping with reporting, real-time analytics, AI and ML, data lakes, and much more.

FAQs

What is unstructured data analysis?

How do you analyze unstructured data?

Can AI analyze unstructured data?

How does unstructured data look?

How do you structure unstructured data?

The first step to structure unstructured data is to clean the data by removing duplicates, outliers, and other non-relevant entries. The next step is to identify the features that will help solve the business problem at hand and organize these features into a format. You can then apply the different data preparation techniques.

For example, if you want to structure lots of text data, categorize and structure the data with techniques like tokenization, stemming, lemmatization, etc. Similarly, if you have an image in hand, you can structure the data based on features like image size, pixels, face description, color, quality, etc.