MongoDB Atlas Best Practices: Part 3

Scaling your MongoDB Atlas Deployment, Delivering Continuous Application Availability

MongoDB Atlas radically simplifies the operation of MongoDB. As with any hosted database as a service, there are still decisions you need to make to ensure the best performance and availability for your application. This blog series provides recommendations that will serve as a solid foundation for getting the most out of the MongoDB Atlas service.

We’ll cover four main areas over this series of blog posts:

- In part 1, we got started by preparing for our deployment, focusing specifically on schema design and application access patterns.

- In part 2, we discussed additional considerations as you prepare for your deployment, including indexing, data migration and instance selection.

- In this part 3 post, we are going to dive into how you scale your MongoDB Atlas deployment and achieve your required availability SLAs.

- In the final part 4, we’ll wrap up with best practices for operational management and ensuring data security.

If you want to get a head start and learn about all of these topics now, just go ahead and download the MongoDB Atlas Best Practices guide.

Scaling a MongoDB Atlas Cluster

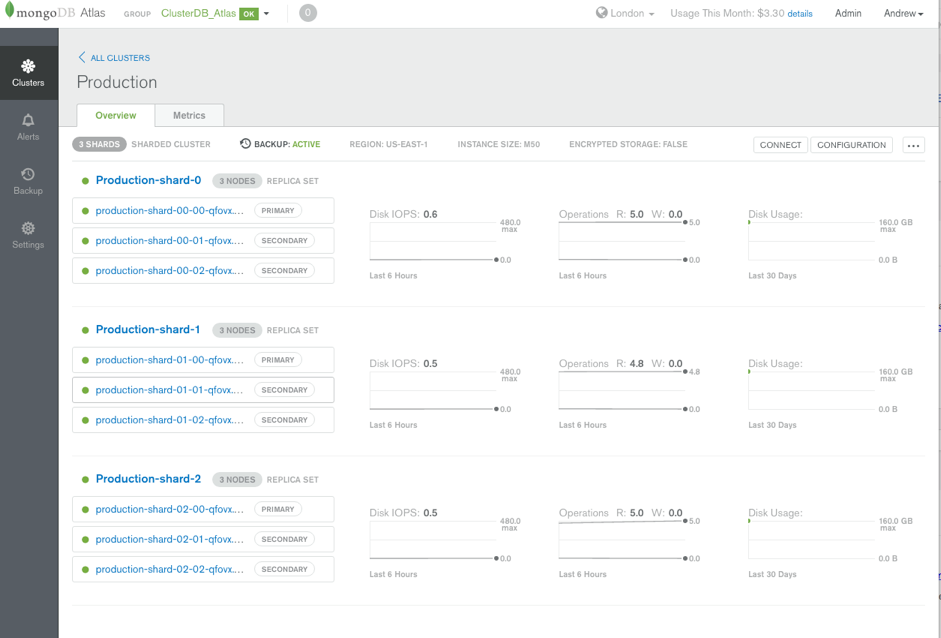

Horizontal Scaling with Sharding

MongoDB Atlas provides horizontal scale-out for databases using a technique called sharding, which is transparent to applications. MongoDB distributes data across multiple Replica Sets called shards. With automatic balancing, MongoDB ensures data is equally distributed across shards as data volumes grow or the size of the cluster increases or decreases. Sharding allows MongoDB deployments to scale beyond the limitations of a single server, such as bottlenecks in RAM or disk I/O, without adding complexity to the application.

MongoDB Atlas supports three types of sharding policy, enabling administrators to accommodate diverse query patterns:

- Range-based sharding: Documents are partitioned across shards according to the shard key value. Documents with shard key values close to one another are likely to be co-located on the same shard. This approach is well suited for applications that need to optimize range-based queries.

- Hash-based sharding: Documents are uniformly distributed according to an MD5 hash of the shard key value. Documents with shard key values close to one another are unlikely to be co-located on the same shard. This approach guarantees a uniform distribution of writes across shards – provided that the shard key has high cardinality – making it optimal for write-intensive workloads.

- Location-aware sharding: Documents are partitioned according to a user-specified configuration that "tags" shard key ranges to physical shards residing on specific hardware.
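As a rough illustration of how the first two policies differ in practice, the sketch below builds the `shardCollection` command document for a range-based key and for a hashed key. The namespace `shop.orders` and the field names are hypothetical, and the helper function is ours, not part of any driver. The only difference between the two policies at this level is the value in the key specification: `1` for ranges, `"hashed"` for hashing.

```python
def shard_collection_command(namespace: str, field: str, hashed: bool = False) -> dict:
    """Build a shardCollection command document for a single-field shard key.

    namespace is "<database>.<collection>"; field is the shard key field.
    """
    key_spec = {field: "hashed"} if hashed else {field: 1}
    # The command name must be the first key in the document.
    return {"shardCollection": namespace, "key": key_spec}


# Hypothetical collection and fields, for illustration only.
range_cmd = shard_collection_command("shop.orders", "order_date")
hash_cmd = shard_collection_command("shop.orders", "customer_id", hashed=True)

print(range_cmd)
print(hash_cmd)
```

A driver would typically send one of these documents to the `admin` database of a sharded cluster; that step is left out here so the example runs without a deployment.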

Users should consider deploying a sharded MongoDB Atlas cluster in the following situations:

- RAM Limitation: The size of the system's active working set plus indexes is expected to exceed the capacity of the maximum amount of RAM in the provisioned instance.

- Disk I/O Limitation: The system will have a large amount of write activity, and the operating system will not be able to write data fast enough to meet demand, or I/O bandwidth will limit how fast the writes can be flushed to disk.

- Storage Limitation: The data set will grow to exceed the storage capacity of a single node in the system.
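The three situations above amount to simple comparisons between what the workload needs and what a single instance offers. The following is a minimal sketch of that check, assuming you already have estimates for each figure; the class, field names, and sample numbers are all hypothetical and are not Atlas metrics or limits.

```python
from dataclasses import dataclass


@dataclass
class CapacityEstimate:
    working_set_gb: float       # actively used data
    index_size_gb: float        # total size of indexes
    data_size_gb: float         # expected size of the full data set
    peak_write_iops: float      # expected peak write operations per second
    instance_ram_gb: float      # RAM of the largest instance you would provision
    instance_storage_gb: float  # storage capacity of a single node
    instance_max_iops: float    # I/O operations per second the node can sustain


def sharding_reasons(est: CapacityEstimate) -> list:
    """Return which of the three limits a single instance is expected to hit."""
    reasons = []
    if est.working_set_gb + est.index_size_gb > est.instance_ram_gb:
        reasons.append("RAM")
    if est.peak_write_iops > est.instance_max_iops:
        reasons.append("disk I/O")
    if est.data_size_gb > est.instance_storage_gb:
        reasons.append("storage")
    return reasons


# Made-up numbers: 48 GB working set + 24 GB of indexes exceeds 64 GB of RAM.
estimate = CapacityEstimate(
    working_set_gb=48, index_size_gb=24, data_size_gb=900,
    peak_write_iops=2500, instance_ram_gb=64,
    instance_storage_gb=1000, instance_max_iops=3000,
)
print(sharding_reasons(estimate))  # ['RAM']
```

An empty list suggests a single replica set is still sufficient for the estimated workload; running the same check against projected growth figures is one way to plan ahead.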

Applications that meet these criteria, or that are likely to do so in the future, should be designed for sharding in advance rather than waiting until they have consumed available capacity. Applications that will eventually benefit from sharding should consider which collections they will want to shard and the corresponding shard keys when designing their data models. If a system has already reached or exceeded its capacity, it will be challenging to deploy sharding without impacting the application's performance.

Between 1 and 12 shards can be configured in MongoDB Atlas.

Sharding Best Practices

Users who choose to shard should consider the following best practices.

Select a good shard key: When selecting fields to use as a shard key, there are at least three key criteria to consider:

- Cardinality: Data partitioning is managed in 64 MB chunks by default. A low-cardinality key (e.g., a user's home country) will tend to group documents together on a small number of shards, which in turn will require frequent rebalancing of the chunks. In addition, the documents for a single country are likely to exceed the 64 MB chunk size. Instead, a shard key should exhibit high cardinality.

- Insert Scaling: Writes should be evenly distributed across all shards based on the shard key. If the shard key is monotonically increasing, for example, all inserts will go to the same shard even if they exhibit high cardinality, thereby creating an insert hotspot. Instead, the key should be evenly distributed.

- Query Isolation: Queries should be targeted to a specific shard to maximize scalability. If queries cannot be isolated to a specific shard, all shards will be queried in a pattern called scatter/gather, which is less efficient than querying a single shard.

Ensure uniform distribution of shard keys: When shard keys are not uniformly distributed for reads and writes, operations may be limited by the capacity of a single shard. When shard keys are uniformly distributed, no single shard will limit the capacity of the system.
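The insert hotspot described under Insert Scaling can be reproduced with a small simulation. The sketch below is a deliberately simplified model, not MongoDB's actual chunk placement: three shards own fixed key ranges (the boundaries are hypothetical), and the hashed variant maps an MD5 digest onto a shard with a modulo. It inserts 1,000 monotonically increasing keys and counts where they land under each scheme.

```python
import bisect
import hashlib
from collections import Counter

# Hypothetical layout: shard 0 owns keys < 1000, shard 1 owns 1000..1999,
# shard 2 owns everything from 2000 upward.
BOUNDARIES = [1000, 2000]
NUM_SHARDS = len(BOUNDARIES) + 1


def range_shard(key: int) -> int:
    """Range-based placement: pick the shard whose range contains the key."""
    return bisect.bisect_right(BOUNDARIES, key)


def hash_shard(key: int) -> int:
    """Hash-based placement (simplified): hash the key, then map to a shard."""
    digest = hashlib.md5(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


# Monotonically increasing keys, such as a counter or a timestamp.
new_keys = range(5000, 6000)

range_counts = Counter(range_shard(k) for k in new_keys)
hash_counts = Counter(hash_shard(k) for k in new_keys)

print("range-based:", dict(range_counts))  # {2: 1000}: every insert hits shard 2
print("hash-based: ", dict(hash_counts))
```

Under the range-based model every new key is larger than all existing boundaries, so one shard absorbs all of the writes despite the key's high cardinality. Hashing the same keys spreads them across the shards.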

For more on selecting a shard key, see Considerations for Selecting Shard Keys.

Avoid scatter-gather queries: In sharded systems, queries that cannot be routed to a single shard must be broadcast to multiple shards for evaluation. Because these queries involve multiple shards for each request, they do not scale well as more shards are added.
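To make the difference between a targeted query and a scatter-gather query concrete, here is a minimal routing sketch. It assumes a hypothetical shard key `customer_id`, three shards with fixed range boundaries, and equality filters only; real query routing handles far more cases than this.

```python
import bisect

SHARD_KEY = "customer_id"  # hypothetical shard key
BOUNDARIES = [1000, 2000]  # shard 0: < 1000, shard 1: 1000..1999, shard 2: >= 2000
ALL_SHARDS = [0, 1, 2]


def target_shards(query_filter: dict) -> list:
    """Return the shards a query must visit in this simplified model."""
    if SHARD_KEY not in query_filter:
        # No shard key in the filter: every shard has to be asked.
        return list(ALL_SHARDS)
    # Equality match on the shard key: exactly one shard owns that value.
    return [bisect.bisect_right(BOUNDARIES, query_filter[SHARD_KEY])]


targeted = target_shards({"customer_id": 1500, "status": "open"})
broadcast = target_shards({"status": "open"})

print("with shard key:   ", targeted)   # [1]
print("without shard key:", broadcast)  # [0, 1, 2]
```

The second query costs one request per shard, so adding shards adds work to every such query, whereas the first stays at a single request regardless of cluster size.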

Use hash-based sharding when appropriate: For applications that issue range-based queries, range-based sharding is beneficial because operations can be routed to the fewest shards necessary, usually a single shard. However, range-based sharding requires a good understanding of your data and queries, which in some cases may not be practical. Hash-based sharding ensures a uniform distribution of reads and writes, but it does not provide efficient range-based operations.

Apply best practices for bulk inserts: Pre-split data into multiple chunks so that no balancing is required during the insert process. For more information see Create Chunks in a Sharded Cluster in the MongoDB Documentation.
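One way to prepare for a bulk load is to compute evenly spaced split points over the expected key range ahead of time. The sketch below does that and wraps each point in a `split` command document with a `middle` field, which is the form we understand the admin command to take. The namespace, field name, key range, and chunk count are hypothetical, and a numeric shard key with a known range is assumed.

```python
def split_points(low: int, high: int, num_chunks: int) -> list:
    """Evenly spaced boundaries that divide [low, high) into num_chunks ranges."""
    if num_chunks < 1 or high <= low:
        raise ValueError("need num_chunks >= 1 and high > low")
    step = (high - low) / num_chunks
    return [low + round(step * i) for i in range(1, num_chunks)]


def split_commands(namespace: str, field: str, points: list) -> list:
    """One split command document per boundary."""
    return [{"split": namespace, "middle": {field: p}} for p in points]


# Hypothetical load: order_id values from 0 up to 1,000,000, split into 4 chunks.
points = split_points(0, 1_000_000, 4)
commands = split_commands("shop.orders", "order_id", points)

print(points)  # [250000, 500000, 750000]
for cmd in commands:
    print(cmd)
```

Running the commands against an empty sharded collection before the load starts means the chunks already exist when the inserts begin.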

Add capacity before it is needed: Cluster maintenance is lower risk and simpler to manage if capacity is added before the system is overutilized.

Continuous Availability & Data Consistency

Data Redundancy

MongoDB maintains multiple copies of data, called replica sets, using native replication. Replica failover is fully automated in MongoDB, so it is not necessary to manually intervene to recover nodes in the event of a failure.

A replica set consists of multiple replica nodes. At any given time, one member acts as the primary replica and the other members act as secondary replicas. If the primary member fails for any reason (e.g., a failure of the host system), one of the secondary members is automatically elected primary and begins to accept all writes. The election typically completes in 2 seconds or less, and reads can optionally continue on the secondaries.

Sophisticated algorithms control the election process, ensuring only the most suitable secondary member is promoted to primary, and reducing the risk of unnecessary failovers (also known as "false positives"). The election algorithm evaluates a range of parameters, including an analysis of histories to identify the replica set members that have applied the most recent updates from the primary, as well as heartbeat and connectivity status.

A larger number of replica nodes provides increased protection against database downtime in case of multiple machine failures. A MongoDB Atlas replica set can be configured with 3, 5, or 7 replicas. Replica set members are deployed across availability zones to avoid the failure of a data center interrupting service to the MongoDB Atlas cluster.
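The benefit of a larger replica set can be put into numbers. The sketch below assumes every member votes and that, as in standard replica set elections, a majority of members must remain reachable to elect a primary. Under that assumption, it computes how many members each of the three configurations can lose while still accepting writes.

```python
def majority(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Members that can be lost while a majority is still available."""
    return members - majority(members)


for n in (3, 5, 7):
    print(f"{n} members: majority = {majority(n)}, tolerates {fault_tolerance(n)} failure(s)")

# 3 members: majority = 2, tolerates 1 failure(s)
# 5 members: majority = 3, tolerates 2 failure(s)
# 7 members: majority = 4, tolerates 3 failure(s)
```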

More information on replica sets can be found on the Replication MongoDB documentation page.

Write Guarantees

MongoDB allows administrators to specify the level of persistence guarantee when issuing writes to the database, which is called the write concern. The following options can be selected in the application code:

- Write Acknowledged: This is the default write concern. The mongod will confirm the execution of the write operation, allowing the client to catch network, duplicate key, Document Validation, and other exceptions.

- Journal Acknowledged: The mongod will confirm the write operation only after it has flushed the operation to the journal on the primary. This confirms that the write operation can survive a mongod crash and ensures that the write operation is durable on disk.

- Replica Acknowledged: It is also possible to wait for acknowledgment of writes to other replica set members. MongoDB supports writing to a specific number of replicas. This mode also ensures that the write is written to the journal on the secondaries. Because replicas can be deployed across racks within data centers and across multiple data centers, ensuring writes propagate to additional replicas can provide extremely robust durability.

- Majority: This write concern waits for the write to be applied to a majority of replica set members, and that the write is recorded in the journal on these replicas – including on the primary.
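In application code, each of these options corresponds to a small write concern document built from the `w` (number of acknowledging members, or `"majority"`), `j` (journal), and `wtimeout` fields. The mapping below is a sketch of how the four levels might be expressed. The dictionary keys are our own labels, the replica count of 2 is an arbitrary example, and setting `j` explicitly for the last two levels is an assumption made here to mirror the descriptions above.

```python
# Our own labels for the four levels described above -> write concern documents.
WRITE_CONCERNS = {
    "write_acknowledged": {"w": 1},
    "journal_acknowledged": {"w": 1, "j": True},
    # Example: wait for 2 members (the primary plus one secondary).
    "replica_acknowledged": {"w": 2, "j": True},
    "majority": {"w": "majority", "j": True},
}


def with_timeout(concern: dict, wtimeout_ms: int) -> dict:
    """Copy of a write concern with an upper bound on how long to wait."""
    return {**concern, "wtimeout": wtimeout_ms}


for level, concern in WRITE_CONCERNS.items():
    print(f"{level}: {concern}")

print(with_timeout(WRITE_CONCERNS["majority"], 5000))
```

Adding a timeout to the stricter levels is a common precaution, since a write that waits on unavailable replicas would otherwise block until they return.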

Read Preferences

Reading from the primary replica is the default configuration as it guarantees consistency. Updates are typically replicated to secondaries quickly, depending on network latency. However, reads on the secondaries will not normally be consistent with reads on the primary. Note that the secondaries are not idle as they must process all writes replicated from the primary. To increase read capacity in your operational system consider sharding. Secondary reads can be useful for analytics and ETL applications as this approach will isolate traffic from operational workloads. You may choose to read from secondaries if your application can tolerate eventual consistency.

A very useful option is primaryPreferred, which issues reads to a secondary replica only if the primary is unavailable. This configuration allows for the continuous availability of reads during the short failover process.
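Read preference is commonly set through the `readPreference` option of the connection string. The helper below is a minimal sketch that appends that option to a URI; the host name and database are placeholders, and the function itself is hypothetical rather than part of any driver.

```python
from urllib.parse import urlencode

READ_PREFERENCE_MODES = {
    "primary", "primaryPreferred", "secondary", "secondaryPreferred", "nearest",
}


def with_read_preference(base_uri: str, mode: str) -> str:
    """Append a readPreference option to a MongoDB connection string."""
    if mode not in READ_PREFERENCE_MODES:
        raise ValueError(f"unknown read preference mode: {mode}")
    separator = "&" if "?" in base_uri else "?"
    return f"{base_uri}{separator}{urlencode({'readPreference': mode})}"


# Placeholder host and database, for illustration only.
uri = with_read_preference("mongodb://example-host:27017/shop", "primaryPreferred")
print(uri)  # mongodb://example-host:27017/shop?readPreference=primaryPreferred
```

An analytics or ETL job that tolerates eventual consistency could use the same helper with `secondary` or `secondaryPreferred` to keep its reads away from the primary.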

For more on the subject of configurable reads, see the MongoDB Documentation page on replica set Read Preference.

Next Steps

That’s a wrap for part 3 of the MongoDB Atlas best practices blog series. In the final instalment, we’ll dive into best practices for operational management and ensuring data security.

Remember, if you want to get a head start and learn about all of our recommendations now: