New Compression Options in MongoDB 3.0

MongoDB 3.0 introduces compression with the WiredTiger storage engine. In this post we will take a look at the different options, and show some examples of how the feature works. As always, YMMV, so we encourage you to test your own data and your own application.

Why compression?

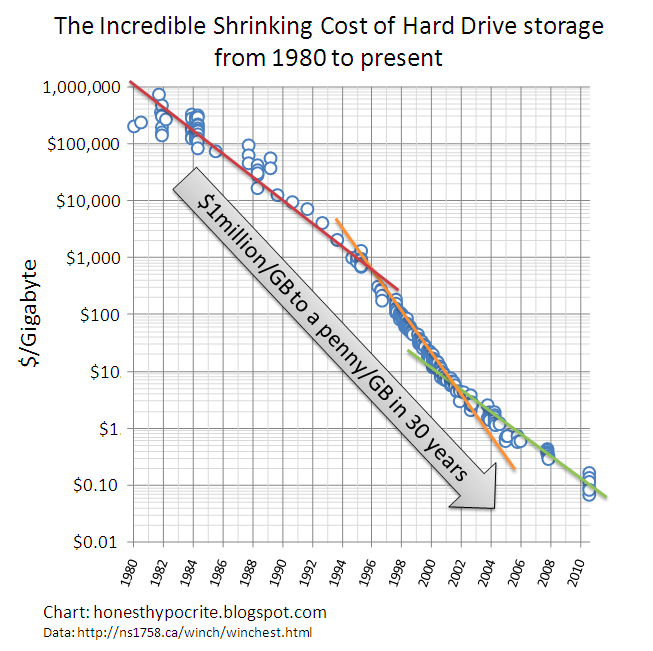

Everyone knows storage is cheap, right?

But chances are you’re adding data faster than storage prices are declining, so your net spend on storage is rising. Your internal costs might also incorporate management and other factors, so they may be significantly higher than commodity market prices. In other words, it still pays to look for ways to reduce your storage needs.

Size is one factor, and there are others. Disk I/O latency is dominated by seek time on rotational storage. By decreasing the size of the data, fewer disk seeks will be necessary to retrieve a given quantity of data, and disk I/O throughput will improve. In terms of RAM, some compressed formats can be used without decompressing the data in memory. In these cases more data can fit in RAM, which improves performance.

Storage properties of MongoDB

There are two important features related to storage that affect how space is used in MongoDB: BSON and dynamic schema.

MongoDB stores data in BSON, a binary encoding of JSON-like documents (BSON supports additional data types, such as dates, different types of numbers, binary). BSON is efficient to encode and decode, and it is easily traversable. However, BSON does not compress data, and it is possible its representation of data is actually larger than the JSON equivalent.

One of the things users love about MongoDB’s document data model is dynamic schema. In most databases, the schema is described and maintained centrally in a catalog or system tables. Column names are stored once for all rows. This approach is efficient in terms of space, but it requires all data to be structured according to the schema. In MongoDB there is currently no central catalog: each document is self-describing. New fields can be added to a document without affecting other documents, and without registering the fields in a central catalog.

The tradeoff is that with greater flexibility comes greater use of space. Field names are defined in every document. It is a best practice to use shorter field names when possible. However, it is also important not to take this too far: single-letter field names or codes can obscure what the fields mean, making the data more difficult to use.

Fortunately, traditional schema is not the only way to be space efficient. Compression is very effective for repeating values like field names, as well as much of the data stored in documents.
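To make this concrete, here is a rough sketch you can run in Node.js. It is only an approximation: JSON text stands in for BSON, Node's built-in zlib stands in for the storage engine's compressor, and the field names and values are made up. It compares the same documents written with long and with short field names, before and after compression.

```javascript
// Illustration only: JSON text stands in for BSON, and Node's zlib stands in
// for the storage engine's compressor. Field names and values are made up.
const zlib = require('zlib');

// Build n documents that share the same field names but differ in values.
function makeDocs(n, names) {
  const docs = [];
  for (let i = 0; i < n; i++) {
    docs.push({
      [names.id]: i,
      [names.carrier]: ['AA', 'DL', 'UA'][i % 3],
      [names.delay]: (i * 7919) % 240,
    });
  }
  return docs;
}

// Size in bytes of the documents as text, and after zlib compression.
function sizes(docs) {
  const raw = Buffer.from(docs.map((d) => JSON.stringify(d)).join('\n'));
  return { raw: raw.length, compressed: zlib.deflateSync(raw).length };
}

const longNames = sizes(makeDocs(1000, {
  id: 'flight_sequence_number',
  carrier: 'unique_carrier_code',
  delay: 'arrival_delay_minutes',
}));
const shortNames = sizes(makeDocs(1000, { id: 'n', carrier: 'c', delay: 'd' }));

console.log('long field names :', longNames);
console.log('short field names:', shortNames);
```

The gap between the two raw sizes should be much larger than the gap between the two compressed sizes, because the repeated field names are exactly the kind of repetition a compressor removes. Exact numbers will depend on your data.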

There is no Universal Compression

Compression is all around us: images (JPEG, GIF), audio (mp3), video (MPEG), and most web servers compress web pages before sending to your browser using gzip. Compression algorithms have been around for decades, and there are competitions that award innovation.

Compression libraries rely on CPU and RAM to compress and decompress data, and each makes different tradeoffs in terms of compression rate, speed, and resource utilization. For example, by one measure, today’s best compression library for text can compress 1GB of Wikipedia data to 124MB, compared to 323MB for gzip, but it takes almost 3,000 times longer and 30,000 times more memory to do so. Depending on your data and your application, one library may be much more effective for your needs than others.

MongoDB 3.0 introduces WiredTiger, a new storage engine that supports compression. WiredTiger manages disk I/O using pages. Each page contains many BSON documents. As pages are written to disk they are compressed by default, and when they are read into the cache from disk they are decompressed.

One of the basic concepts of compression is that repeating values – exact values as well as patterns – can be stored once in compressed form, reducing the total amount of space. Larger units of data tend to compress more effectively as there tend to be more repeating values. By compressing at the page level – commonly called block compression – WiredTiger can more efficiently compress data.
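The effect of the unit size is easy to see in a small experiment. The sketch below is not how WiredTiger lays out pages; it simply uses Node's built-in zlib on made-up JSON documents to compare compressing each document on its own with compressing all of them together as one block.

```javascript
// Illustration only: Node's zlib on hypothetical JSON documents,
// not WiredTiger's actual page format.
const zlib = require('zlib');

// 500 small, similar documents.
const docs = [];
for (let i = 0; i < 500; i++) {
  docs.push(JSON.stringify({ _id: i, origin: 'JFK', dest: 'LAX', carrier: 'AA', delay: i % 60 }));
}

// Option A: compress every document on its own and add up the results.
const perDocument = docs
  .map((d) => zlib.deflateSync(Buffer.from(d)).length)
  .reduce((sum, n) => sum + n, 0);

// Option B: compress all documents together as one "page".
const asOneBlock = zlib.deflateSync(Buffer.from(docs.join('\n'))).length;

const rawBytes = Buffer.byteLength(docs.join('\n'));
console.log({ rawBytes, perDocument, asOneBlock });
```

Compressed one at a time, each document is too small for the compressor to find much repetition. Compressed together, the shared field names and values only need to be stored once.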

WiredTiger supports multiple compression libraries. You can decide which option is best for you at the collection level. This is an important option – your access patterns and your data could be quite different across collections. For example, if you’re using GridFS to store large documents such as images and videos, MongoDB automatically breaks the large files into many smaller “chunks” and reassembles them when requested. The implementation of GridFS maintains two collections: fs.files, which contains the metadata for the large files and their associated chunks, and fs.chunks, which contains the large data broken into 255KB chunks. With images and videos, compression will probably be beneficial for the fs.files collection, but the data contained in fs.chunks is probably already compressed, and so it may make sense to disable compression for this collection.

Compression options in MongoDB 3.0

In MongoDB 3.0, WiredTiger provides three compression options for collections:

- No compression

- Snappy (enabled by default) – very good compression, efficient use of resources

- zlib (similar to gzip) – excellent compression, but more resource intensive

There are two compression options for indexes:

- No compression

- Prefix (enabled by default) – good compression, efficient use of resources

You may wonder why the compression options for indexes are different than those for collections. Prefix compression is fairly simple: the “prefix” of values is deduplicated from the data set. This is especially effective for some data sets, like those with low cardinality (e.g., country), or those with repeating values, like phone numbers, social security codes, and geo-coordinates. It is especially effective for compound indexes, where the first field is repeated with all the unique values of the second field. Prefix compression also provides one very important advantage over Snappy or zlib: queries operate directly on the compressed indexes, including covering queries.
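The idea behind prefix compression can be sketched in a few lines. This is an illustration of the general technique, not WiredTiger's on-disk format, and the keys are hypothetical compound index entries (country, then city). Each key is stored as the number of leading characters it shares with the previous key, plus the remaining suffix.

```javascript
// Sketch of prefix compression over sorted keys. This is NOT WiredTiger's
// on-disk format; it only illustrates the idea.

// Length of the common prefix of two strings.
function sharedPrefixLength(a, b) {
  let i = 0;
  while (i < a.length && i < b.length && a[i] === b[i]) i++;
  return i;
}

// Encode each key as [characters shared with the previous key, remaining suffix].
function prefixEncode(sortedKeys) {
  let previous = '';
  return sortedKeys.map((key) => {
    const shared = sharedPrefixLength(previous, key);
    previous = key;
    return [shared, key.slice(shared)];
  });
}

// Rebuild the original keys from the encoded form.
function prefixDecode(encoded) {
  let previous = '';
  return encoded.map(([shared, suffix]) => {
    previous = previous.slice(0, shared) + suffix;
    return previous;
  });
}

// Hypothetical compound index keys: country, then city.
const keys = ['US|Austin', 'US|Boston', 'US|Chicago', 'US|Denver'];
const encoded = prefixEncode(keys);
console.log(JSON.stringify(encoded));
// [[0,"US|Austin"],[3,"Boston"],[3,"Chicago"],[3,"Denver"]]
```

The repeated `US|` prefix is stored once, and every later key only carries its own suffix. The more entries share a leading field, the more this saves.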

When compressed collection data is accessed from disk, it is decompressed in cache. With prefix compression, indexes can remain compressed in RAM. We tend to see very good compression with indexes using prefix compression, which means that in most cases you can fit more of your indexes in RAM without sacrificing performance for reads, and with very modest impact to writes. The compression rate will vary significantly depending on the cardinality of your data and whether you use compound indexes.

Some things to keep in mind that apply to all the compression options in MongoDB 3.0:

- Random data does not compress well

- Binary data does not compress well (it may already be compressed)

- Text compresses especially well

- Field names compress well in documents (the additional benefits of short field names are modest)
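You can see the first and third points with a quick experiment in Node.js. This sketch uses Node's built-in zlib as a stand-in compressor; the random bytes also approximate data that is already compressed, and the "text" is a made-up, deliberately repetitive sample, so real text will compress less dramatically.

```javascript
// Illustration only: Node's zlib as a stand-in compressor on synthetic data.
const zlib = require('zlib');
const crypto = require('crypto');

// Fraction of the original size that remains after compression.
function compressedFraction(buffer) {
  return zlib.deflateSync(buffer).length / buffer.length;
}

const size = 64 * 1024;

// Random bytes: no repeating patterns for the compressor to exploit.
const randomData = crypto.randomBytes(size);

// Text built by cycling through a small vocabulary (made-up sample).
const words = ['please', 'review', 'the', 'attached', 'schedule',
  'before', 'our', 'meeting', 'on', 'Tuesday'];
let text = '';
for (let i = 0; text.length < size; i++) {
  text += words[(i * 7) % words.length] + ' ';
}
const textData = Buffer.from(text);

console.log('random:', compressedFraction(randomData).toFixed(3));
console.log('text  :', compressedFraction(textData).toFixed(3));
```

The random buffer should come out at roughly its original size (or slightly larger), while the text shrinks to a small fraction of its size.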

Compression is enabled by default for collections and indexes in the WiredTiger storage engine. To explicitly set the compression for the replica at startup, specify the appropriate options in the YAML config file, or use the command line option --wiredTigerCollectionBlockCompressor. Because WiredTiger is not the default storage engine in MongoDB 3.0, you’ll also need to specify the --storageEngine option to use WiredTiger and take advantage of these compression features.

To specify compression for specific collections, you’ll need to override the defaults by passing the appropriate options in the db.createCollection() command. For example, to create a collection called email using the zlib compression library:

    db.createCollection( "email", { storageEngine: {
        wiredTiger: { configString: "block_compressor=zlib" }}})

How to measure compression

The best way to measure compression is to separately load the data with and without compression enabled, then compare the two sizes. The db.stats() command returns many different storage statistics, but the two that matter for this comparison are storageSize and indexSize. Values are returned in bytes, but you can convert to MB by passing in 1024*1024:

    > db.stats(1024*1024).storageSize + db.stats(1024*1024).indexSize
    1406.9201011657715

This is the method we used for the comparisons provided below.
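If you want to script the comparison, the calculation can be wrapped in a small helper. This is a sketch: `onDiskMB` is a name we made up, and the sample values are hypothetical. It takes the document returned by `db.stats()` (in bytes, with no scale argument) and adds the two statistics named above.

```javascript
// Sum the two statistics that reflect on-disk usage and convert to MB.
// `stats` is the document returned by db.stats() with no scale argument,
// so the values are in bytes.
function onDiskMB(stats) {
  const bytesPerMB = 1024 * 1024;
  return (stats.storageSize + stats.indexSize) / bytesPerMB;
}

// Hypothetical values for illustration only.
// In the mongo shell you would call: onDiskMB(db.stats())
const sample = {
  dataSize: 4 * 1024 * 1024,    // not used in the sum
  storageSize: 2 * 1024 * 1024,
  indexSize: 512 * 1024,
};
console.log(onDiskMB(sample)); // 2.5
```

Run it once against each test instance and compare the results.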

Testing compression on different data sets

Let’s take a look at some different data sets to see how some of the compression options perform. We have five databases:

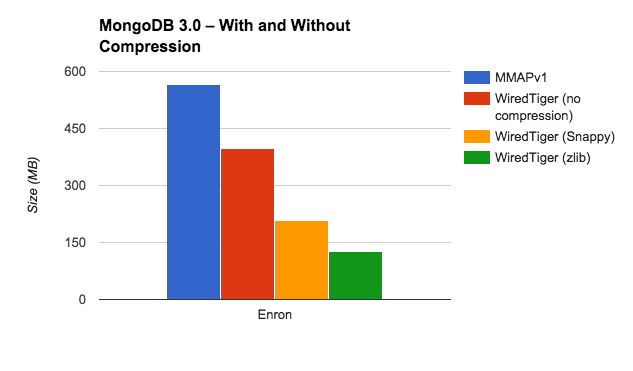

Enron

This is the infamous Enron email corpus. It includes about a half million emails. There’s a great deal of text in the email body fields, and some of the metadata has low cardinality, which means that they’re both likely to compress well. Here’s an example (the email body is truncated):

    {
        "_id" : ObjectId("4f16fc97d1e2d32371003e27"),
        "body" : "",
        "subFolder" : "notes_inbox",
        "mailbox" : "bass-e",
        "filename" : "450.",
        "headers" : {
            "X-cc" : "",
            "From" : "michael.simmons@enron.com",
            "Subject" : "Re: Plays and other information",
            "X-Folder" : "\\Eric_Bass_Dec2000\\Notes Folders\\Notes inbox",
            "Content-Transfer-Encoding" : "7bit",
            "X-bcc" : "",
            "To" : "eric.bass@enron.com",
            "X-Origin" : "Bass-E",
            "X-FileName" : "ebass.nsf",
            "X-From" : "Michael Simmons",
            "Date" : "Tue, 14 Nov 2000 08:22:00 -0800 (PST)",
            "X-To" : "Eric Bass",
            "Message-ID" : "<6884142.1075854677416.JavaMail.evans@thyme>",
            "Content-Type" : "text/plain; charset=us-ascii",
            "Mime-Version" : "1.0"
        }
    }

Here’s how the different options performed with the Enron database:

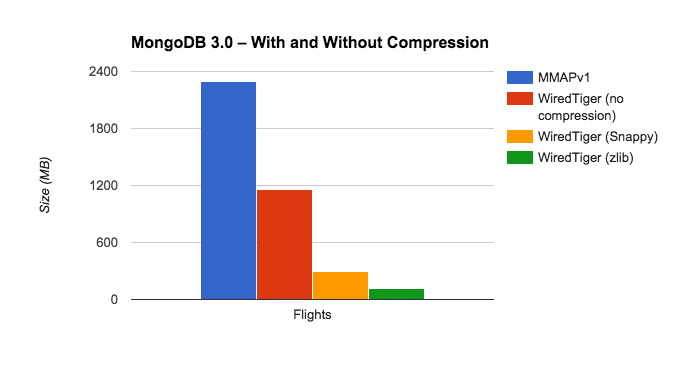

Flights

The US Federal Aviation Administration (FAA) provides data about on-time performance of airlines. Each flight is represented as a document. Many of the fields have low cardinality, so we expect this data set to compress well:

    {
        "_id" : ObjectId("53d81b734aaa3856391da1fb"),
        "origin" : {
            "airport_seq_id" : 1247802,
            "name" : "JFK",
            "wac" : 22,
            "state_fips" : 36,
            "airport_id" : 12478,
            "state_abr" : "NY",
            "city_name" : "New York, NY",
            "city_market_id" : 31703,
            "state_nm" : "New York"
        },
        "arr" : {
            "delay_group" : 0,
            "time" : ISODate("2014-01-01T12:38:00Z"),
            "del15" : 0,
            "delay" : 13,
            "delay_new" : 13,
            "time_blk" : "1200-1259"
        },
        "crs_arr_time" : ISODate("2014-01-01T12:25:00Z"),
        "delays" : {
            "dep" : 14,
            "arr" : 13
        },
        "taxi_in" : 5,
        "distance_group" : 10,
        "fl_date" : ISODate("2014-01-01T00:00:00Z"),
        "actual_elapsed_time" : 384,
        "wheels_off" : ISODate("2014-01-01T09:34:00Z"),
        "fl_num" : 1,
        "div_airport_landings" : 0,
        "diverted" : 0,
        "wheels_on" : ISODate("2014-01-01T12:33:00Z"),
        "crs_elapsed_time" : 385,
        "dest" : {
            "airport_seq_id" : 1289203,
            "state_nm" : "California",
            "wac" : 91,
            "state_fips" : 6,
            "airport_id" : 12892,
            "state_abr" : "CA",
            "city_name" : "Los Angeles, CA",
            "city_market_id" : 32575
        },
        "crs_dep_time" : ISODate("2014-01-01T09:00:00Z"),
        "cancelled" : 0,
        "unique_carrier" : "AA",
        "taxi_out" : 20,
        "tail_num" : "N338AA",
        "air_time" : 359,
        "carrier" : "AA",
        "airline_id" : 19805,
        "dep" : {
            "delay_group" : 0,
            "time" : ISODate("2014-01-01T09:14:00Z"),
            "del15" : 0,
            "delay" : 14,
            "delay_new" : 14,
            "time_blk" : "0900-0959"
        },
        "distance" : 2475
    }

Here’s how the different options performed with the Flights database:

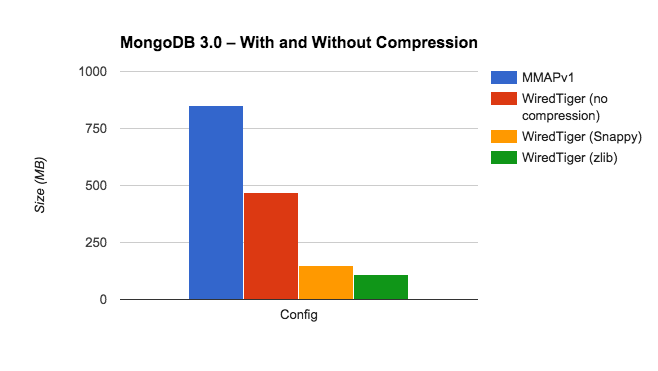

MongoDB Config Database

This is the metadata MongoDB stores in the config database for sharded clusters. The manual describes the various collections in that database. Here’s an example from the chunks collection, which stores a document for each chunk in the cluster:

    {
        "_id" : "mydb.foo-a_\"cat\"",
        "lastmod" : Timestamp(1000, 3),
        "lastmodEpoch" : ObjectId("5078407bd58b175c5c225fdc"),
        "ns" : "mydb.foo",
        "min" : {
            "animal" : "cat"
        },
        "max" : {
            "animal" : "dog"
        },
        "shard" : "shard0004"
    }

Here’s how the different options performed with the config database:

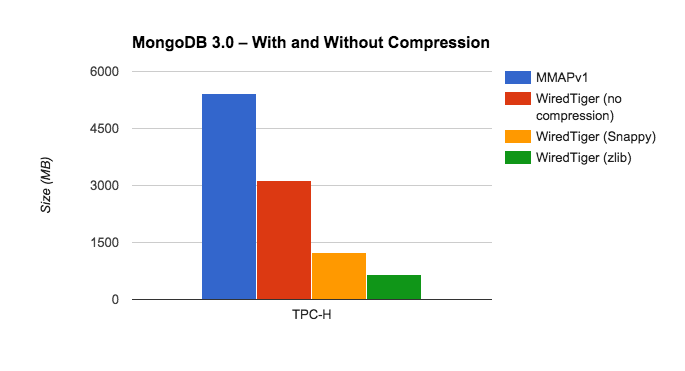

TPC-H

TPC-H is a classic benchmark used for testing relational analytical DBMS. The schema has been modified to use MongoDB’s document model. Here’s an example from the orders table with only the first of many line items displayed for this order:

    {
        "_id" : 1,
        "cname" : "Customer#000036901",
        "status" : "O",
        "totalprice" : 173665.47,
        "orderdate" : ISODate("1996-01-02T00:00:00Z"),
        "comment" : "instructions sleep furiously among ",
        "lineitems" : [
            {
                "lineitem" : 1,
                "mfgr" : "Manufacturer#4",
                "brand" : "Brand#44",
                "type" : "PROMO BRUSHED NICKEL",
                "container" : "JUMBO JAR",
                "quantity" : 17,
                "returnflag" : "N",
                "linestatus" : "O",
                "extprice" : 21168.23,
                "discount" : 0.04,
                "shipinstr" : "DELIVER IN PERSON",
                "realPrice" : 20321.5008,
                "shipmode" : "TRUCK",
                "commitDate" : ISODate("1996-02-12T00:00:00Z"),
                "shipDate" : ISODate("1996-03-13T00:00:00Z"),
                "receiptDate" : ISODate("1996-03-22T00:00:00Z"),
                "tax" : 0.02,
                "size" : 9,
                "nation" : "UNITED KINGDOM",
                "region" : "EUROPE"
            }
        ]
    }

Here’s how the different options performed with the TPC-H database:

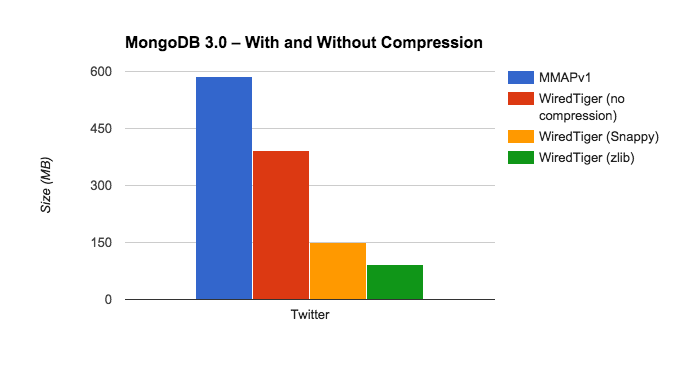

Twitter

This is a database of 200K tweets. Here’s a simulated tweet introducing our Java 3.0 driver:

    {
        "coordinates": null,
        "created_at": "Fri April 25 16:02:46 +0000 2010",
        "favorited": false,
        "truncated": false,
        "id_str": "",
        "entities": {
            "urls": [
                {
                    "expanded_url": null,
                    "url": "http://mongodb.com",
                    "indices": [
                        69,
                        100
                    ]
                }
            ],
            "hashtags": [ ],
            "user_mentions": [
                {
                    "name": "MongoDB",
                    "id_str": "",
                    "id": null,
                    "indices": [
                        25,
                        30
                    ],
                    "screen_name": "MongoDB"
                }
            ]
        },
        "in_reply_to_user_id_str": null,
        "text": "Introducing the #Java 3.0 driver for #MongoDB http://buff.ly/1DmMTKu",
        "contributors": null,
        "id": null,
        "retweet_count": 12,
        "in_reply_to_status_id_str": null,
        "geo": null,
        "retweeted": true,
        "in_reply_to_user_id": null,
        "user": {
            "profile_sidebar_border_color": "C0DEED",
            "name": "MongoDB",
            "profile_sidebar_fill_color": "DDEEF6",
            "profile_background_tile": false,
            "profile_image_url": "",
            "location": "New York, NY",
            "created_at": "Fri April 25 23:22:09 +0000 2008",
            "id_str": "",
            "follow_request_sent": false,
            "profile_link_color": "",
            "favourites_count": 1,
            "url": "http://mongodb.com",
            "contributors_enabled": false,
            "utc_offset": -25200,
            "id": null,
            "profile_use_background_image": true,
            "listed_count": null,
            "protected": false,
            "lang": "en",
            "profile_text_color": "",
            "followers_count": 159678,
            "time_zone": "Eastern Time (US & Canada)",
            "verified": false,
            "geo_enabled": true,
            "profile_background_color": "",
            "notifications": false,
            "description": "Community conversation around the MongoDB software. For official company news, follow @mongodbinc.",
            "friends_count": ,
            "profile_background_image_url": "",
            "statuses_count": 7311,
            "screen_name": "MongoDB",
            "following": false,
            "show_all_inline_media": false
        },
        "in_reply_to_screen_name": null,
        "source": "web",
        "place": null,
        "in_reply_to_status_id": null
    }

Here’s how the different options performed with the Twitter database:

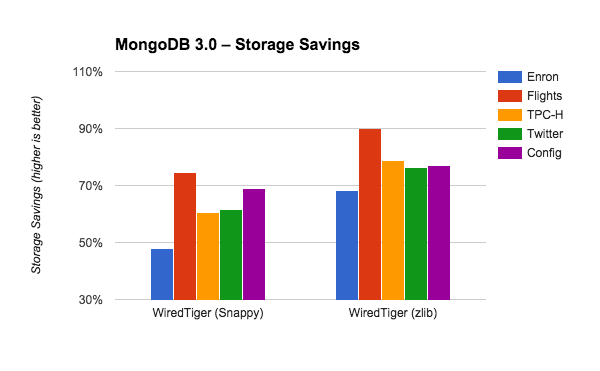

Comparing compression rates

The varying sizes of these databases make them difficult to compare side by side in terms of absolute size. We can take a closer look at the benefits by comparing the storage savings provided by each option. To do this, we compare the size of each database using Snappy and zlib to the uncompressed size in WiredTiger. As above, we’re adding the value of storageSize and indexSize.

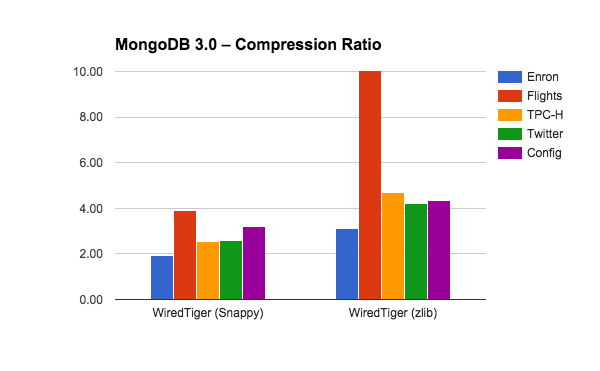

Another way some people describe the benefits of compression is in terms of the ratio of the uncompressed size to the compressed size. Here’s how Snappy and zlib perform across the five databases.
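Both measures are simple arithmetic on the uncompressed and compressed sizes. A minimal sketch, with function names of our own choosing and hypothetical sizes that are not measurements from this post:

```javascript
// Storage savings as a percentage of the uncompressed size.
function savingsPercent(uncompressed, compressed) {
  return (1 - compressed / uncompressed) * 100;
}

// Ratio of the uncompressed size to the compressed size.
function compressionRatio(uncompressed, compressed) {
  return uncompressed / compressed;
}

// Hypothetical sizes in MB, for illustration only.
console.log(savingsPercent(1000, 250));   // 75
console.log(compressionRatio(1000, 250)); // 4
```

The two numbers describe the same result: a database that shrinks from 1000MB to 250MB saves 75% of its space, which is a 4:1 ratio.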

How to test your own data

There are two simple ways for you to test compression with your data in MongoDB 3.0.

If you’ve already upgraded to MongoDB 3.0, you can simply add a new secondary to your replica set with the option to use the WiredTiger storage engine specified at startup. While you’re at it, make this replica hidden with 0 votes so that it won’t affect your deployment. This new replica set member will perform an initial sync with one of your existing secondaries. After the initial sync is complete, you can remove the WiredTiger replica from your replica set then connect to that standalone to compare the size of your databases as described above. For each compression option you want to test, you can repeat this process.
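As a rough sketch of the member settings described above, the helper below builds the document you would pass to `rs.add()`. The helper name, member id, and host name are hypothetical. Note that a hidden member must also have priority 0.

```javascript
// Build a replica set member document for a temporary test node.
// hidden: true  keeps it invisible to client applications,
// priority: 0   is required for hidden members,
// votes: 0      keeps it out of elections.
function hiddenTestMember(id, host) {
  return { _id: id, host: host, priority: 0, hidden: true, votes: 0 };
}

// Hypothetical id and host name. In the mongo shell, connected to the primary:
//   rs.add(hiddenTestMember(3, "wt-test.example.net:27017"))
console.log(hiddenTestMember(3, 'wt-test.example.net:27017'));
```

The `_id` must not collide with an existing member, so check `rs.conf()` before choosing one.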

Another option is to take a mongodump of your data and use that to restore it into a standalone MongoDB 3.0 instance. By default your collections will use the Snappy compression option, but you can specify different options by first creating the collections with the appropriate setting before running mongorestore, or by starting mongod with different compression options. This approach has the advantage of being able to dump/restore only specific databases, collections, or subsets of collections to perform your testing.

For examples of syntax for setting compression options, see the section “Compression options in MongoDB 3.0” above.

A note on capped collections

Capped collections are implemented very differently in the MMAPv1 storage engine as compared to WiredTiger (and RocksDB). In MMAPv1, space is allocated for the capped collection at creation time, whereas for WiredTiger and RocksDB space is only allocated as data is added to the capped collection. If you have many empty or mostly-empty capped collections, comparisons between the different storage engines may be somewhat misleading for this reason.


Asya is Lead Product Manager at MongoDB. She joined MongoDB as one of the company's first Solutions Architects. Prior to MongoDB, Asya spent seven years in similar positions at Coverity, a leading development testing company. Before that she spent twelve years working with databases as a developer, DBA, data architect and data warehousing specialist.