Hi,

I am following the MongoDB documentation page "Deploying A MongoDB Cluster With Docker"

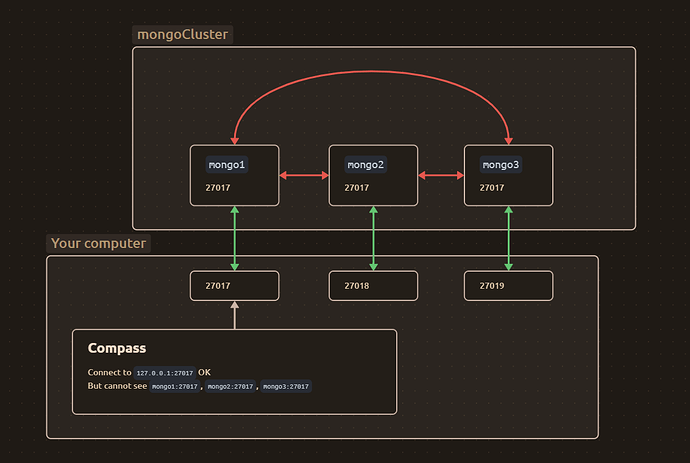

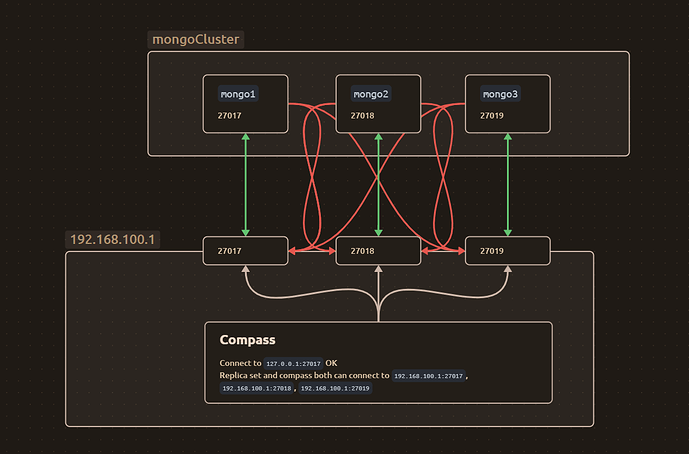

on a Mac, using nerdctl as the command-line client for the containerd runtime.

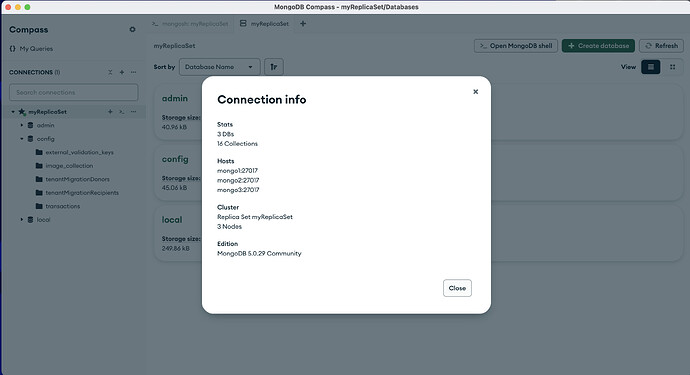

I was able to complete the setup and connect to the database from Compass.

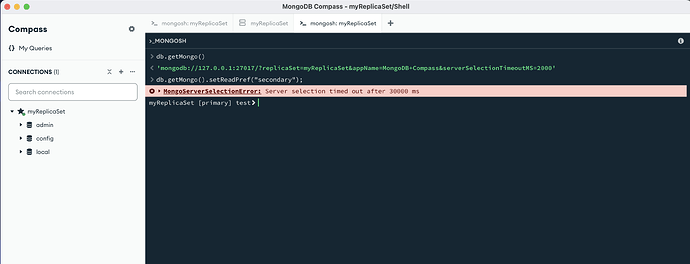

However, when I try to set the read preference to secondary, it just times out. The replica set status looks healthy:

rjohn@FIVE9-DYFPDQ0WXH ~ % lima nerdctl exec -it mongo1 mongosh --eval "rs.status()"

{

set: 'myReplicaSet',

date: ISODate('2024-10-11T03:45:07.595Z'),

myState: 1,

term: Long('1'),

syncSourceHost: '',

syncSourceId: -1,

heartbeatIntervalMillis: Long('2000'),

majorityVoteCount: 2,

writeMajorityCount: 2,

votingMembersCount: 3,

writableVotingMembersCount: 3,

optimes: {

lastCommittedOpTime: { ts: Timestamp({ t: 1728618307, i: 1 }), t: Long('1') },

lastCommittedWallTime: ISODate('2024-10-11T03:45:07.056Z'),

readConcernMajorityOpTime: { ts: Timestamp({ t: 1728618307, i: 1 }), t: Long('1') },

appliedOpTime: { ts: Timestamp({ t: 1728618307, i: 1 }), t: Long('1') },

durableOpTime: { ts: Timestamp({ t: 1728618307, i: 1 }), t: Long('1') },

lastAppliedWallTime: ISODate('2024-10-11T03:45:07.056Z'),

lastDurableWallTime: ISODate('2024-10-11T03:45:07.056Z')

},

lastStableRecoveryTimestamp: Timestamp({ t: 1728618287, i: 1 }),

electionCandidateMetrics: {

lastElectionReason: 'electionTimeout',

lastElectionDate: ISODate('2024-10-11T03:30:06.905Z'),

electionTerm: Long('1'),

lastCommittedOpTimeAtElection: { ts: Timestamp({ t: 1728617396, i: 1 }), t: Long('-1') },

lastSeenOpTimeAtElection: { ts: Timestamp({ t: 1728617396, i: 1 }), t: Long('-1') },

numVotesNeeded: 2,

priorityAtElection: 1,

electionTimeoutMillis: Long('10000'),

numCatchUpOps: Long('0'),

newTermStartDate: ISODate('2024-10-11T03:30:06.954Z'),

wMajorityWriteAvailabilityDate: ISODate('2024-10-11T03:30:08.126Z')

},

members: [

{

_id: 0,

name: 'mongo1:27017',

health: 1,

state: 1,

stateStr: 'PRIMARY',

uptime: 947,

optime: { ts: Timestamp({ t: 1728618307, i: 1 }), t: Long('1') },

optimeDate: ISODate('2024-10-11T03:45:07.000Z'),

lastAppliedWallTime: ISODate('2024-10-11T03:45:07.056Z'),

lastDurableWallTime: ISODate('2024-10-11T03:45:07.056Z'),

syncSourceHost: '',

syncSourceId: -1,

infoMessage: '',

electionTime: Timestamp({ t: 1728617406, i: 1 }),

electionDate: ISODate('2024-10-11T03:30:06.000Z'),

configVersion: 1,

configTerm: 1,

self: true,

lastHeartbeatMessage: ''

},

{

_id: 1,

name: 'mongo2:27017',

health: 1,

state: 2,

stateStr: 'SECONDARY',

uptime: 911,

optime: { ts: Timestamp({ t: 1728618297, i: 1 }), t: Long('1') },

optimeDurable: { ts: Timestamp({ t: 1728618297, i: 1 }), t: Long('1') },

optimeDate: ISODate('2024-10-11T03:44:57.000Z'),

optimeDurableDate: ISODate('2024-10-11T03:44:57.000Z'),

lastAppliedWallTime: ISODate('2024-10-11T03:45:07.056Z'),

lastDurableWallTime: ISODate('2024-10-11T03:45:07.056Z'),

lastHeartbeat: ISODate('2024-10-11T03:45:05.956Z'),

lastHeartbeatRecv: ISODate('2024-10-11T03:45:07.189Z'),

pingMs: Long('0'),

lastHeartbeatMessage: '',

syncSourceHost: 'mongo1:27017',

syncSourceId: 0,

infoMessage: '',

configVersion: 1,

configTerm: 1

},

{

_id: 2,

name: 'mongo3:27017',

health: 1,

state: 2,

stateStr: 'SECONDARY',

uptime: 911,

optime: { ts: Timestamp({ t: 1728618297, i: 1 }), t: Long('1') },

optimeDurable: { ts: Timestamp({ t: 1728618297, i: 1 }), t: Long('1') },

optimeDate: ISODate('2024-10-11T03:44:57.000Z'),

optimeDurableDate: ISODate('2024-10-11T03:44:57.000Z'),

lastAppliedWallTime: ISODate('2024-10-11T03:45:07.056Z'),

lastDurableWallTime: ISODate('2024-10-11T03:45:07.056Z'),

lastHeartbeat: ISODate('2024-10-11T03:45:05.957Z'),

lastHeartbeatRecv: ISODate('2024-10-11T03:45:07.189Z'),

pingMs: Long('0'),

lastHeartbeatMessage: '',

syncSourceHost: 'mongo1:27017',

syncSourceId: 0,

infoMessage: '',

configVersion: 1,

configTerm: 1

}

],

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t: 1728618307, i: 1 }),

signature: {

hash: Binary.createFromBase64('AAAAAAAAAAAAAAAAAAAAAAAAAAA=', 0),

keyId: Long('0')

}

},

operationTime: Timestamp({ t: 1728618307, i: 1 })

}

When I try setReadPref("secondary"), it just times out.
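
One thing that may be relevant: the rs.status() output lists the members by their container names (mongo1:27017, mongo2:27017, mongo3:27017). A client that follows the replica set topology to reach a secondary would need to resolve those names from wherever it runs. Below is a minimal sketch of a check for that, assuming Python 3 is available on the Mac host. The helper names are hypothetical, and this only tests name resolution; it is a guess at a possible cause, not a confirmed diagnosis.

```python
import socket

# Member names copied from the rs.status() output above.
MEMBERS = ["mongo1:27017", "mongo2:27017", "mongo3:27017"]


def split_host_port(member):
    """Split a 'host:port' string into (host, port)."""
    host, _, port = member.rpartition(":")
    return host, int(port)


def can_resolve(host, port, resolver=socket.getaddrinfo):
    """Return True if the host name resolves on this machine."""
    try:
        resolver(host, port)
        return True
    except socket.gaierror:
        return False


def check_members(members, resolver=socket.getaddrinfo):
    """Map each member name to whether its host name resolves."""
    return {
        m: can_resolve(*split_host_port(m), resolver=resolver)
        for m in members
    }


if __name__ == "__main__":
    for member, ok in check_members(MEMBERS).items():
        status = "resolves" if ok else "does NOT resolve"
        print(f"{member}: {status}")
```

If the names do not resolve on the host, that would at least be consistent with the timeout when reads are routed to mongo2 or mongo3, since only the container network knows those names.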

Could you please tell me where I am going wrong?